Ever been asked a question you only knew part of the answer to? To give a more informed response, your best move would be to phone a friend with more knowledge on the subject.

This collaborative process can also help large language models (LLMs) improve their accuracy. Still, it’s been difficult to teach LLMs to recognize when they should collaborate with another model on an answer. Instead of using complex formulas or large amounts of labeled data to spell out where models should work together, researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have envisioned a more organic approach.

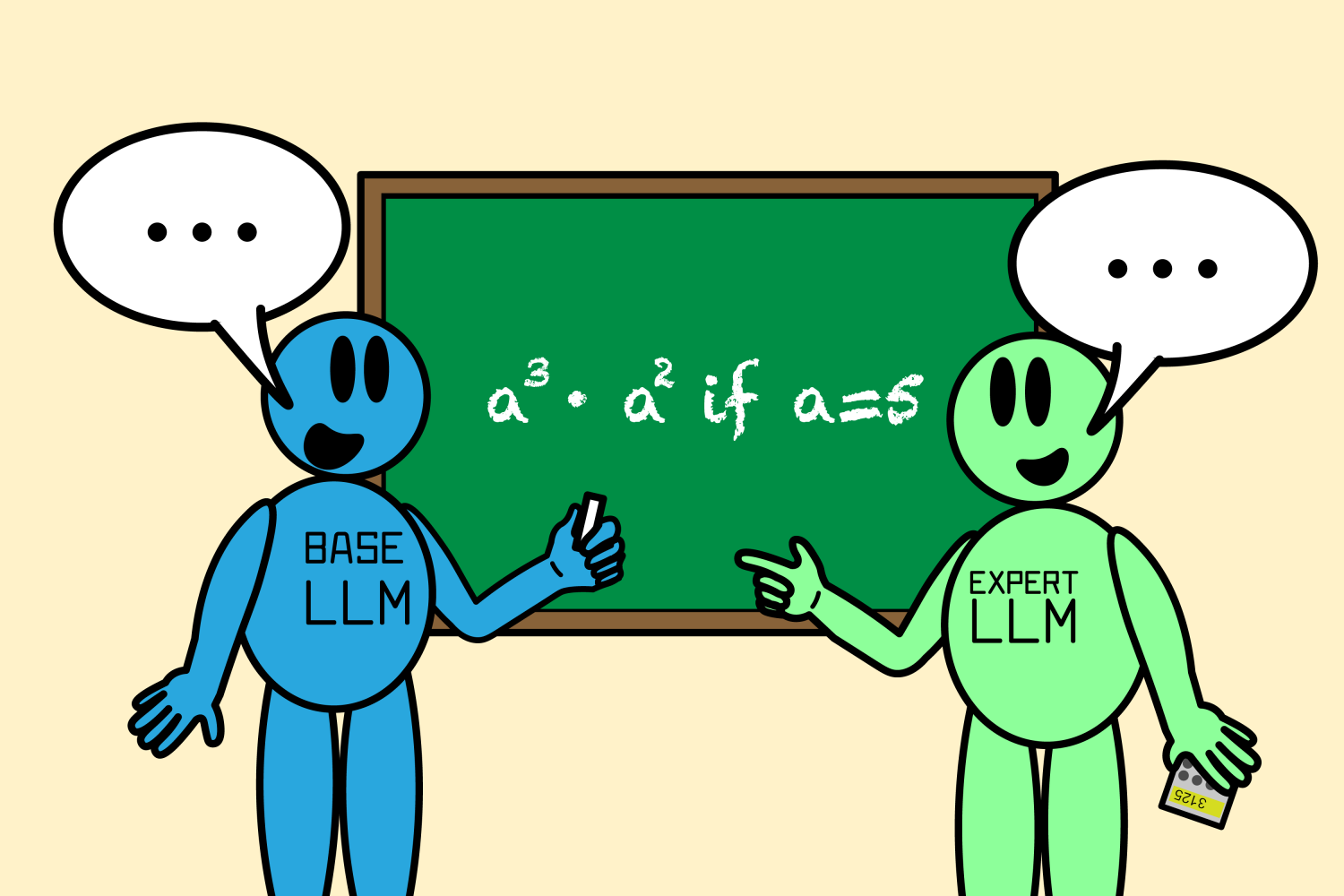

Their new algorithm, called “Co-LLM,” can pair a general-purpose base LLM with a more specialized model and help them work together. As the former crafts an answer, Co-LLM reviews each word (or token) within its response to see where it can call upon a more accurate answer from the expert model. This process leads to more accurate replies to things like medical prompts and math and reasoning problems. Since the expert model is not needed at each iteration, this also leads to more efficient response generation.

To decide when a base model needs help from an expert model, the framework uses machine learning to train a “switch variable,” or a tool that can indicate the competence of each word within the two LLMs’ responses. The switch is like a project manager, finding areas where it should call in a specialist. If you asked Co-LLM to name some examples of extinct bear species, for instance, two models would draft answers together. The general-purpose LLM begins to put together a reply, with the switch variable intervening at the parts where it can slot in a better token from the expert model, such as adding the year when the bear species became extinct.
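The article does not say how the switch variable is parameterized or how decoding proceeds, so the following is only a minimal sketch under stated assumptions: greedy decoding, a switch computed as a logistic score over the base model’s hidden state, and a fixed 0.5 threshold. The names `co_decode` and `switch_probability`, the weights, and the scripted toy models are all hypothetical; this is not CSAIL’s implementation. It shows the key property described above: the base model runs at every step, while the expert is queried only for the tokens where the switch fires.

```python
import math
from typing import Callable, List, Tuple

# Hypothetical interfaces: the base model returns its proposed next token plus a
# hidden-state vector; the expert model returns only a next token.
BaseModel = Callable[[List[str]], Tuple[str, List[float]]]
ExpertModel = Callable[[List[str]], str]


def switch_probability(hidden: List[float], weights: List[float], bias: float) -> float:
    """Assumed switch: a logistic score over the base model's hidden state."""
    z = sum(h * w for h, w in zip(hidden, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def co_decode(base: BaseModel, expert: ExpertModel, prompt: List[str],
              weights: List[float], bias: float,
              threshold: float = 0.5, max_tokens: int = 20) -> Tuple[List[str], int]:
    """Greedy token-by-token decoding that defers to the expert only when the switch fires."""
    tokens = list(prompt)
    expert_calls = 0
    for _ in range(max_tokens):
        base_token, hidden = base(tokens)
        if switch_probability(hidden, weights, bias) > threshold:
            next_token = expert(tokens)  # the expert is queried for this one token only
            expert_calls += 1
        else:
            next_token = base_token
        if next_token == "<eos>":
            break
        tokens.append(next_token)
    return tokens[len(prompt):], expert_calls


# Toy, hand-scripted models for illustration only.
def toy_base(prefix: List[str]) -> Tuple[str, List[float]]:
    script = ["The", "answer", "is", "125", "<eos>"]
    step = len(prefix) - 1  # the toy prompt is a single token
    token = script[step] if step < len(script) else "<eos>"
    # Hand-set "hidden state": 1.0 marks the token the base model is unsure about.
    return token, [1.0 if token == "125" else 0.0]


def toy_expert(prefix: List[str]) -> str:
    return "3,125"


output, calls = co_decode(toy_base, toy_expert, ["a^3 * a^2 if a=5:"],
                          weights=[4.0], bias=-2.0)
print(" ".join(output), calls)  # The answer is 3,125 1
```

In this toy run the expert is called once, for the single token the base model would have gotten wrong; every other token comes from the base model.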

“With Co-LLM, we’re essentially training a general-purpose LLM to ‘phone’ an expert model when needed,” says Shannon Shen, an MIT PhD student in electrical engineering and computer science and CSAIL affiliate who’s a lead author on a new paper about the approach. “We use domain-specific data to teach the base model about its counterpart’s expertise in areas like biomedical tasks and math and reasoning questions. This process automatically finds the parts of the data that are hard for the base model to generate, and then it instructs the base model to switch to the expert LLM, which was pretrained on data from a similar field. The general-purpose model provides the ‘scaffolding’ generation, and when it calls on the specialized LLM, it prompts the expert to generate the desired tokens. Our findings indicate that the LLMs learn patterns of collaboration organically, resembling how humans recognize when to call upon an expert to fill in the blanks.”
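The article describes this training step only at a high level. One standard way to learn a switch without token-level labels, offered here as an assumption rather than a description of CSAIL’s code, is to treat “which model produced this token” as a latent variable. The probability of each observed token is then a mixture of the base and expert probabilities, the training loss is the mixture’s negative log-likelihood, and the posterior over the latent choice flags the tokens that are hard for the base model. The function names and all probabilities below are hypothetical, made-up values; in a full setup `p_defer` would come from the learned switch rather than being fixed at 0.5, and the loss would be minimized by gradient descent.

```python
import math
from typing import List, Tuple

# Each entry: (switch prior p_defer, base prob of observed token, expert prob of observed token).
TokenProbs = Tuple[float, float, float]


def token_nll(p_defer: float, p_base: float, p_expert: float) -> float:
    """Negative log of the two-model mixture probability of the observed token."""
    return -math.log((1.0 - p_defer) * p_base + p_defer * p_expert)


def sequence_nll(steps: List[TokenProbs]) -> float:
    """Training loss for one sequence: the sum of per-token mixture NLLs."""
    return sum(token_nll(*s) for s in steps)


def defer_posterior(p_defer: float, p_base: float, p_expert: float) -> float:
    """Posterior probability that the expert, not the base, produced the observed token."""
    from_expert = p_defer * p_expert
    return from_expert / ((1.0 - p_defer) * p_base + from_expert)


# Made-up probabilities for the target text "The answer is 3,125".
steps = [
    (0.5, 0.90, 0.80),  # "The": easy for both models
    (0.5, 0.85, 0.80),  # "answer"
    (0.5, 0.90, 0.85),  # "is"
    (0.5, 0.01, 0.90),  # "3,125": hard for the base, easy for the expert
]
posteriors = [round(defer_posterior(*s), 3) for s in steps]
print(posteriors)
print(round(sequence_nll(steps), 3))
```

Only the last token gets a posterior close to 1, which is the sense in which this kind of objective “automatically finds” hard tokens without anyone labeling them.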

A mix of flexibility and factuality

Imagine asking a general-purpose LLM to name the ingredients of a specific prescription drug. It may reply incorrectly, necessitating the expertise of a specialized model.

To showcase Co-LLM’s flexibility, the researchers used data like the BioASQ medical set to couple a base LLM with expert LLMs in different domains, like the Meditron model, which is pretrained on unlabeled medical data. This enabled the algorithm to help answer inquiries a biomedical expert would typically receive, such as naming the mechanisms causing a particular disease.

For example, if you asked a simple LLM alone to name the ingredients of a specific prescription drug, it may reply incorrectly. With the added expertise of a model that specializes in biomedical data, you’d get a more accurate answer. Co-LLM also alerts users where to double-check answers.

Another example of Co-LLM’s performance boost: When tasked with solving a math problem like “a³ · a² if a=5,” the general-purpose model incorrectly calculated the answer to be 125. As Co-LLM trained the model to collaborate more with a large math LLM called Llemma, together they determined that the correct solution was 3,125.
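The arithmetic behind this example: multiplying powers of the same base adds their exponents. The wrong answer, 125, equals 5³, which suggests (the article does not say so) that the base model dropped the a² factor.

```latex
a^3 \cdot a^2 = a^{3+2} = a^5, \qquad
a = 5:\; 5^5 = 3125, \qquad \text{whereas } 5^3 = 125.
```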

Co-LLM gave more accurate replies than fine-tuned simple LLMs and untuned specialized models working independently. Co-LLM can guide two models that were trained differently to work together, whereas other effective LLM collaboration approaches, such as “Proxy Tuning,” need all of their component models to be trained similarly. Additionally, this baseline requires each model to be used simultaneously to produce the answer, whereas MIT’s algorithm simply activates its expert model for particular tokens, leading to more efficient generation.
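A toy sketch can make the efficiency contrast concrete. It assumes the published formulation of proxy tuning, in which a base model’s next-token logits are shifted by the difference between a small tuned model and its untuned counterpart. That arithmetic indexes the same token in every model, so the models must share a vocabulary, and all three run at every step. The cost count is deliberately simplified (it ignores prompt processing and caching), and the function names, logits, and token counts are hypothetical.

```python
from typing import Dict, List

# Token -> logit, over a vocabulary shared by every model.
Logits = Dict[str, float]


def proxy_tuned_logits(base: Logits, tuned: Logits, untuned: Logits) -> Logits:
    """Proxy-tuning-style combination: base logits plus the (tuned - untuned) offset.

    Indexing the same token in all three dicts is why the models need a shared vocabulary.
    """
    return {tok: base[tok] + tuned[tok] - untuned[tok] for tok in base}


def forward_passes(num_tokens: int, deferred_steps: List[int]) -> Dict[str, int]:
    """Toy count of model calls needed to generate num_tokens tokens under each scheme."""
    return {
        "proxy_tuning": 3 * num_tokens,  # all three models run at every step
        "co_llm": num_tokens + len(deferred_steps),  # base every step, expert only on deferral
    }


combined = proxy_tuned_logits(
    base={"125": 2.0, "3,125": 1.0},
    tuned={"125": 0.5, "3,125": 3.0},
    untuned={"125": 1.0, "3,125": 0.5},
)
print(max(combined, key=combined.get))  # 3,125
print(forward_passes(num_tokens=4, deferred_steps=[3]))  # {'proxy_tuning': 12, 'co_llm': 5}
```

Under this simplified accounting, the savings grow with the fraction of tokens the base model can handle on its own.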

When to ask the expert

The MIT researchers’ algorithm highlights that imitating human teamwork more closely can increase accuracy in multi-LLM collaboration. To further elevate its factual precision, the team may draw from human self-correction: They’re considering a more robust deferral approach that can backtrack when the expert model doesn’t give a correct response. This upgrade would allow Co-LLM to course-correct so the algorithm can still give a satisfactory reply.

The team would also like to update the expert model (via only training the base model) when new information is available, keeping answers as current as possible. This would allow Co-LLM to pair the most up-to-date information with strong reasoning power. Eventually, the model could assist with enterprise documents, using the latest information it has to update them accordingly. Co-LLM could also train small, private models to work with a more powerful LLM to improve documents that must remain within the server.

“Co-LLM presents an interesting approach for learning to choose between two models to improve efficiency and performance,” says Colin Raffel, associate professor at the University of Toronto and an associate research director at the Vector Institute, who wasn’t involved in the research. “Since routing decisions are made at the token-level, Co-LLM provides a granular way of deferring difficult generation steps to a more powerful model. The unique combination of model-token-level routing also provides a great deal of flexibility that similar methods lack. Co-LLM contributes to an important line of work that aims to develop ecosystems of specialized models to outperform expensive monolithic AI systems.”

Shen wrote the paper with four other CSAIL affiliates: PhD student Hunter Lang ’17, MEng ’18; former postdoc and Apple AI/ML researcher Bailin Wang; MIT assistant professor of electrical engineering and computer science Yoon Kim, and professor and Jameel Clinic member David Sontag PhD ’10, who are both part of MIT-IBM Watson AI Lab. Their research was supported, in part, by the National Science Foundation, The National Defense Science and Engineering Graduate (NDSEG) Fellowship, MIT-IBM Watson AI Lab, and Amazon. Their work was presented at the Annual Meeting of the Association for Computational Linguistics.