Have you ever deployed an AI model, only to discover it’s delivering unexpected results in a real-world setting? Monitoring models is as crucial as deploying them. That’s why we’re excited to introduce Inference Tables to simplify monitoring and diagnostics for AI models. Inference Tables let you continuously capture inputs and predictions from Databricks Model Serving endpoints and log them into a Unity Catalog Delta Table. You can then leverage existing data tools, including Lakehouse Monitoring, to monitor, debug, and optimize your AI models.

Inference Tables are a great example of the value you get when doing AI on a Lakehouse platform. Without any additional complexity or cost, you can enable monitoring on every deployed model. This allows you to detect issues early and take immediate action, such as retraining, to consistently achieve the best business outcomes from your AI models.

To enable Inference Tables, fill out the signup form or contact your Databricks representative.

“Databricks Lakehouse AI provides us with a unified environment to train, deploy, and monitor various machine learning models. Using Inference Tables, we are able to monitor and debug deployed models, which helps us maintain model performance over time. This is enabling us to deliver improved models for a better customer experience on Housing.com.”

— Dr. Anil Goyal, Principal Data Scientist at Housing.com

What is an Inference Table?

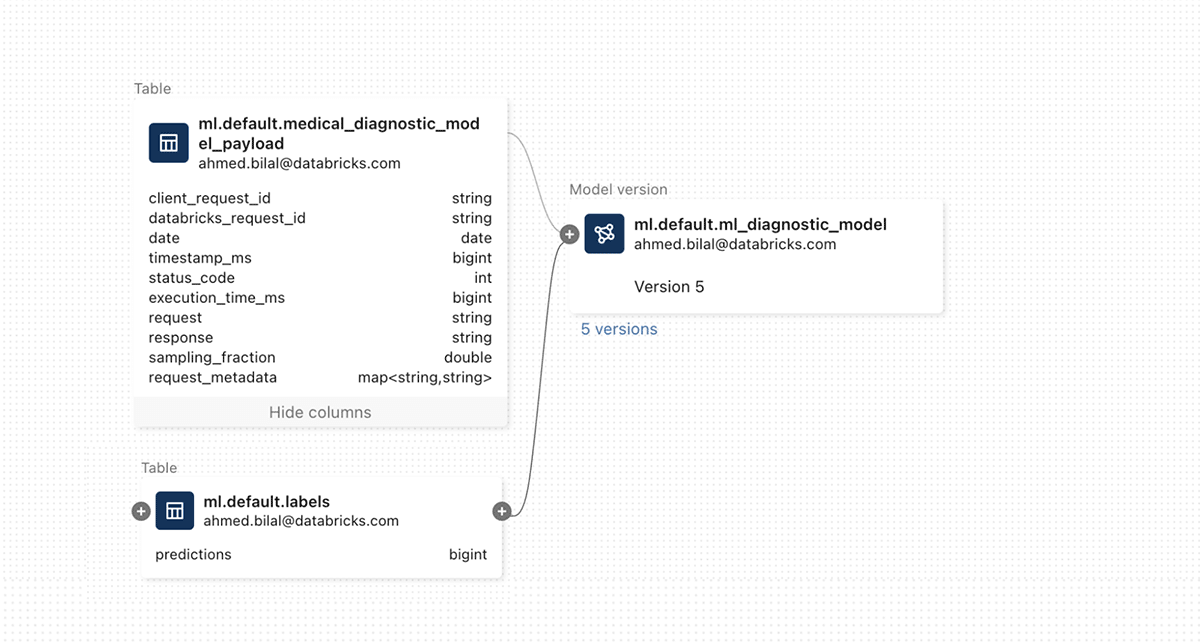

An Inference Table is a Unity Catalog-managed Delta Table that stores online prediction requests and responses from model serving endpoints. Once enabled, Inference Tables become a powerful tool for various use cases, including monitoring, debugging, creation of training corpora, and compliance audits. Instead of requiring separate tools for each purpose, Inference Tables provide a table representation of a model so that consistent governance and monitoring tools can be used across all stages of ML.

You can simply enable Inference Tables on both existing and new model serving endpoints with a single click. Prediction requests and responses will begin logging into the Unity Catalog-governed table, making it easy to discover, govern, and share these tables with teams.

What can you do with Inference Tables?

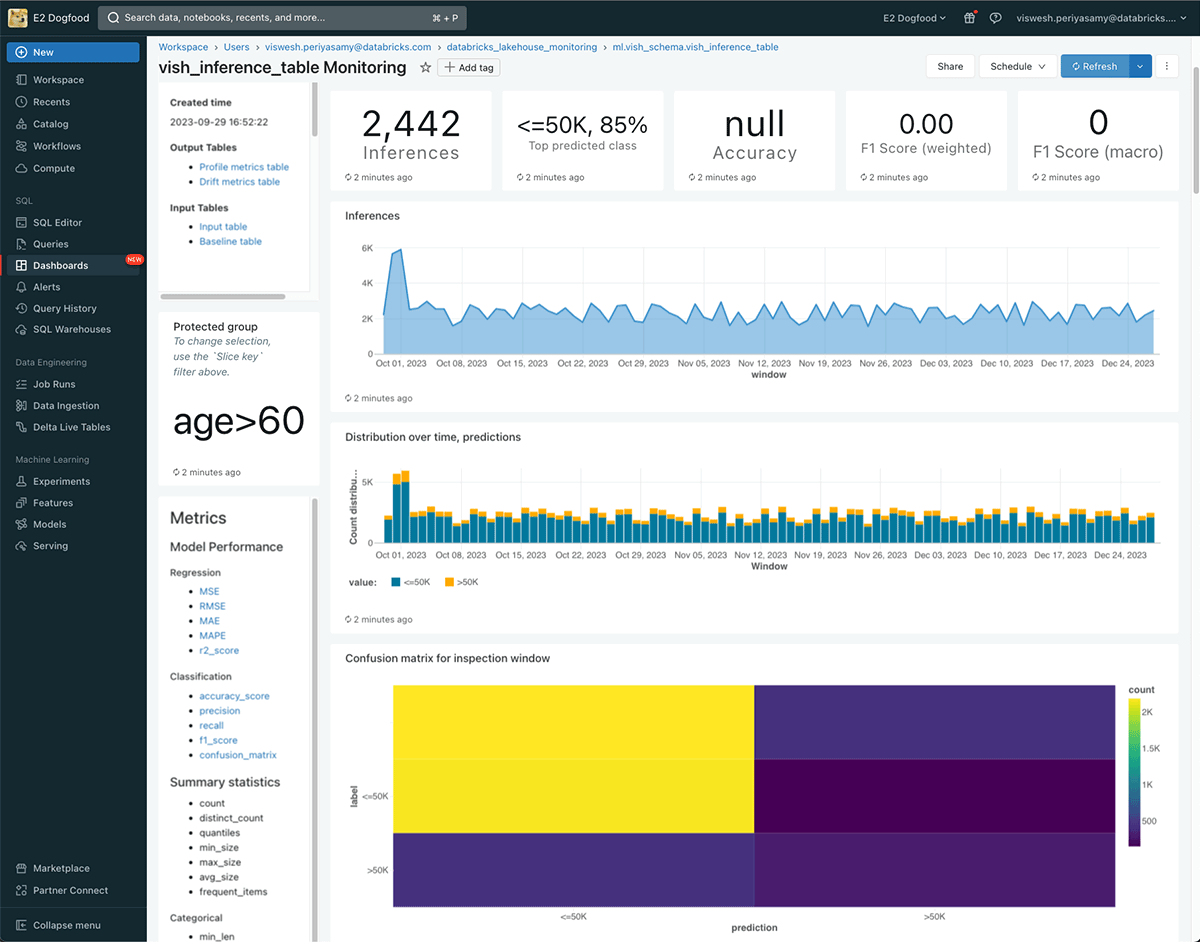

Monitor Model Quality with Lakehouse Monitoring

You can continuously monitor your model performance and data drift using Lakehouse Monitoring, the first monitoring solution designed for both AI and data assets. Lakehouse Monitoring automatically generates data and ML quality dashboards that are easily shared with stakeholders. Additionally, you can enable alerts to know when you need to retrain your model based on fundamental shifts in data or reductions in model performance. Thanks to this simplification, quality no longer has to remain an afterthought, and monitoring can be enabled on all endpoints.
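To make the idea of a drift alert concrete, here is a minimal sketch in plain Python. It is not how Lakehouse Monitoring computes its metrics; it swaps in a deliberately simple statistic (the shift of a feature’s mean, measured in baseline standard deviations). The function names, the sample values, and the threshold of 2.0 are all illustrative assumptions.

```python
from statistics import mean, pstdev


def mean_shift_score(baseline, current):
    """Shift of the current mean from the baseline mean, in baseline standard deviations."""
    spread = pstdev(baseline)
    shift = abs(mean(current) - mean(baseline))
    if spread == 0:
        # Constant baseline: any change at all counts as an unbounded shift.
        return 0.0 if shift == 0 else float("inf")
    return shift / spread


def should_alert(baseline, current, threshold=2.0):
    """Flag a window whose mean drifted past the (assumed) threshold."""
    return mean_shift_score(baseline, current) > threshold


# Hypothetical values of one numeric input feature.
baseline_feature = [10, 12, 11, 13, 12, 11]   # e.g. values seen at training time
stable_window = [11, 12, 12, 11]              # recent traffic, similar distribution
shifted_window = [15, 16, 17, 16]             # recent traffic after a shift

print(should_alert(baseline_feature, stable_window))
print(should_alert(baseline_feature, shifted_window))
```

In practice you would likely prefer a distribution-level statistic over a simple mean comparison, but the alerting pattern (compute a score per window, compare against a threshold) stays the same.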

“With Databricks Model Serving, we can now train, deploy, monitor, and retrain machine learning models, all on the same platform. By bringing model serving (and monitoring) together with the feature store, we can ensure deployed models are always up-to-date and delivering accurate results. This streamlined approach allows us to focus on maximizing the business impact of AI without worrying about availability and operational concerns.”

— Don Scott, VP Product Development at Hitachi Solutions

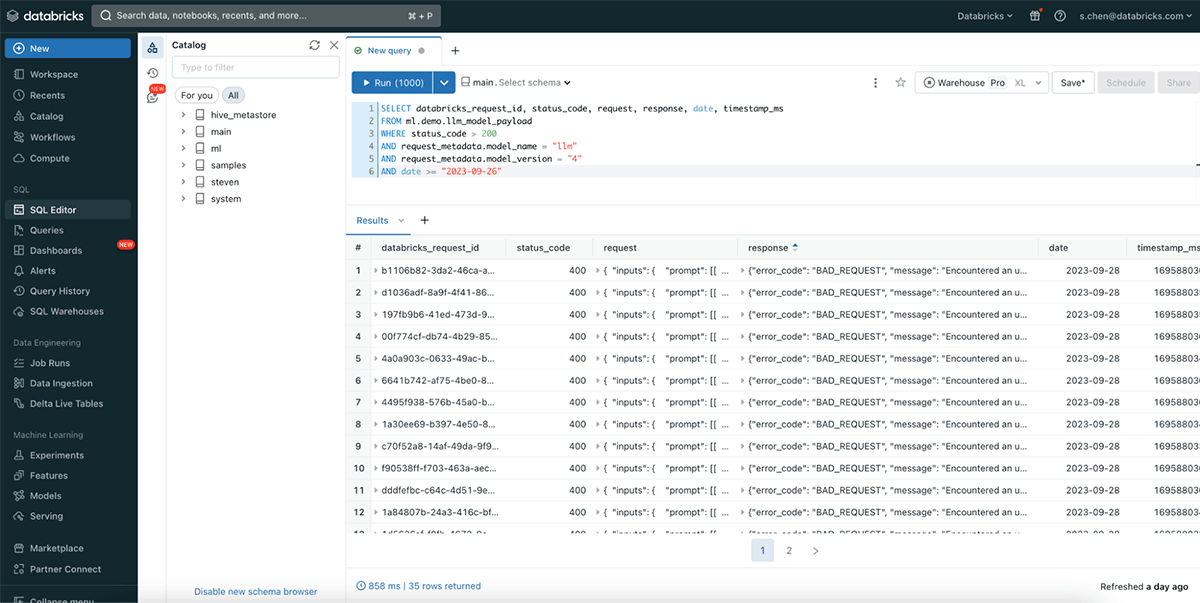

Debug Production Issues

Inference Tables simplify the debugging process by logging all the important data you need: HTTP status codes, model execution times, and other endpoint-specific details. The best part? There’s no need to learn new tools. You can debug endpoints by analyzing the Inference Table with queries in Databricks SQL, or use Python in a notebook for advanced post-analysis.
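As a rough illustration of this kind of post-analysis, the sketch below summarizes failed and slow requests from a handful of logged rows. It uses plain Python lists in place of a real table read, and the column names (`request_id`, `status_code`, `execution_time_ms`), the sample rows, and the 500 ms threshold are assumptions made for the example; check your own table’s schema before adapting it.

```python
from collections import Counter

# Hypothetical rows standing in for records read from an Inference Table.
rows = [
    {"request_id": "a1", "status_code": 200, "execution_time_ms": 42},
    {"request_id": "a2", "status_code": 200, "execution_time_ms": 51},
    {"request_id": "a3", "status_code": 500, "execution_time_ms": 870},
    {"request_id": "a4", "status_code": 200, "execution_time_ms": 38},
    {"request_id": "a5", "status_code": 400, "execution_time_ms": 5},
]


def summarize(rows, slow_threshold_ms=500):
    """Count responses per HTTP status and list failed and slow requests."""
    status_counts = Counter(row["status_code"] for row in rows)
    failed = [row["request_id"] for row in rows if row["status_code"] >= 400]
    slow = [
        row["request_id"]
        for row in rows
        if row["execution_time_ms"] > slow_threshold_ms
    ]
    return {
        "status_counts": dict(status_counts),
        "error_rate": len(failed) / len(rows) if rows else 0.0,
        "failed": failed,
        "slow": slow,
    }


summary = summarize(rows)
print(summary)
```

The same aggregation maps naturally onto a SQL `GROUP BY` over the status column if you prefer to stay in Databricks SQL.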

You can also replay past traffic to new models with the historical data in Inference Tables. With this capability, you can easily generate a side-by-side comparison of model latencies. You can further tie this data into our LLM evaluation suite to evaluate different LLMs for safety and quality.
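The replay idea can be sketched in a few lines of Python. This is only an outline under stated assumptions: `current_model` and `candidate_model` are hypothetical local functions standing in for two served models, and `logged_requests` is made-up data standing in for request payloads read from an Inference Table. Against real endpoints you would send each request to the endpoint instead of calling a local function.

```python
import time


def replay(logged_requests, predict):
    """Send each logged request to `predict` and record its output and latency."""
    results = []
    for request in logged_requests:
        start = time.perf_counter()
        prediction = predict(request)
        latency_ms = (time.perf_counter() - start) * 1000
        results.append({"prediction": prediction, "latency_ms": latency_ms})
    return results


# Hypothetical stand-ins for the currently deployed model and a new candidate.
def current_model(features):
    return sum(features) > 1.0


def candidate_model(features):
    return max(features) > 0.6


# Hypothetical request payloads, as they might be recovered from logged traffic.
logged_requests = [[0.2, 0.9], [0.1, 0.3], [0.7, 0.8]]

baseline_run = replay(logged_requests, current_model)
candidate_run = replay(logged_requests, candidate_model)

# Side-by-side view: one row per replayed request.
comparison = [
    {
        "request": request,
        "baseline_ms": base["latency_ms"],
        "candidate_ms": cand["latency_ms"],
        "same_prediction": base["prediction"] == cand["prediction"],
    }
    for request, base, cand in zip(logged_requests, baseline_run, candidate_run)
]

for row in comparison:
    print(row)
```

Recording whether the two models agree on each request, alongside latency, makes it easier to spot regressions in behavior as well as in speed.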

Create Training Corpus to Improve AI Models

With your data in Unity Catalog, you can easily join Inference Tables with ground truth labels to create a powerful training corpus for improving your models. If you don’t have labels, you can generate them through our partnership with Labelbox and easily combine them with Inference Tables. You can then fine-tune these models and even automate retraining using Databricks Workflows. This continuous feedback loop ensures you always obtain the best predictions from your model.
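The join described above might look roughly like the following. This is a plain-Python sketch, not Databricks code: the field names (`request_id`, `request`, `response`), the sample rows, and the idea that labels arrive keyed by request ID are all assumptions for illustration. On real tables the same logic would typically be a join between the Inference Table and a labels table.

```python
def build_training_corpus(inference_rows, labels_by_id):
    """Pair each logged request with its ground truth label, skipping unlabeled rows."""
    corpus = []
    for row in inference_rows:
        label = labels_by_id.get(row["request_id"])
        if label is None:
            continue  # no ground truth available yet for this request
        corpus.append(
            {
                "features": row["request"],
                "prediction": row["response"],
                "label": label,
            }
        )
    return corpus


# Hypothetical logged requests and responses.
inference_rows = [
    {"request_id": "r1", "request": [0.2, 0.9], "response": "approve"},
    {"request_id": "r2", "request": [0.1, 0.3], "response": "reject"},
    {"request_id": "r3", "request": [0.7, 0.8], "response": "approve"},
]

# Hypothetical ground truth labels collected later; "r2" has none yet.
labels_by_id = {"r1": "approve", "r3": "reject"}

corpus = build_training_corpus(inference_rows, labels_by_id)
mispredicted = [ex for ex in corpus if ex["prediction"] != ex["label"]]

print(len(corpus), "labeled examples,", len(mispredicted), "mispredicted")
```

Keeping the model’s original prediction next to the label makes it straightforward to focus retraining on the examples the deployed model got wrong.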

Next Steps

- Enable Inference Tables for Model Serving by signing up here.

- Dive deeper into the Inference Tables documentation (AWS | Azure).

- Learn more about Databricks’ approach to Monitoring here.