We’re excited to announce that Meta AI’s Llama 2 foundation chat models are available in the Databricks Marketplace for you to fine-tune and deploy on private model serving endpoints. The Databricks Marketplace is an open marketplace that lets you share and exchange data assets, including datasets and notebooks, across clouds, regions, and platforms. Adding to the data assets already offered on the Marketplace, this new listing provides instant access to Llama 2’s chat-oriented large language models (LLMs), from 7 to 70 billion parameters, along with centralized governance and lineage tracking in the Unity Catalog. Each model is wrapped in MLflow, so you can use the MLflow Evaluation API in Databricks notebooks and deploy with a single click to our LLM-optimized GPU model serving endpoints.

What’s Llama 2?

Llama 2 is Meta AI’s family of generative text models optimized for chat use cases. In Meta AI’s reported evaluations, the chat models outperformed other open chat models on most benchmarks tested, and they represent a breakthrough in which fine-tuned open models can compete with OpenAI’s GPT-3.5-turbo.
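Because the Llama 2 chat models are tuned for dialogue, they expect prompts in a specific format. The sketch below reproduces the single-turn template used in Meta AI’s reference Llama 2 code, with an optional system prompt folded into the user turn. The function name `format_llama2_chat_prompt` is hypothetical, and whether the Marketplace-packaged MLflow models apply this template for you is an assumption to check against the listing’s example notebook.

```python
from typing import Optional

# Turn and system-prompt markers from Meta AI's reference Llama 2 chat format.
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def format_llama2_chat_prompt(user_message: str, system_prompt: Optional[str] = None) -> str:
    """Build a single-turn Llama 2 chat prompt (hypothetical helper).

    The optional system prompt is prepended to the user message inside
    <<SYS>> markers. The BOS token (<s>) is normally added by the tokenizer,
    so it is not included in the returned string.
    """
    content = user_message.strip()
    if system_prompt:
        content = f"{B_SYS}{system_prompt.strip()}{E_SYS}{content}"
    return f"{B_INST} {content} {E_INST}"


prompt = format_llama2_chat_prompt("What is Unity Catalog?", "You are a concise assistant.")
```

Multi-turn conversations repeat the `[INST] ... [/INST]` pattern for each user turn, with the model’s earlier replies placed between them.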

Llama 2 on Lakehouse AI

You can now get a secure, end-to-end experience with Llama 2 on the Databricks Lakehouse AI platform:

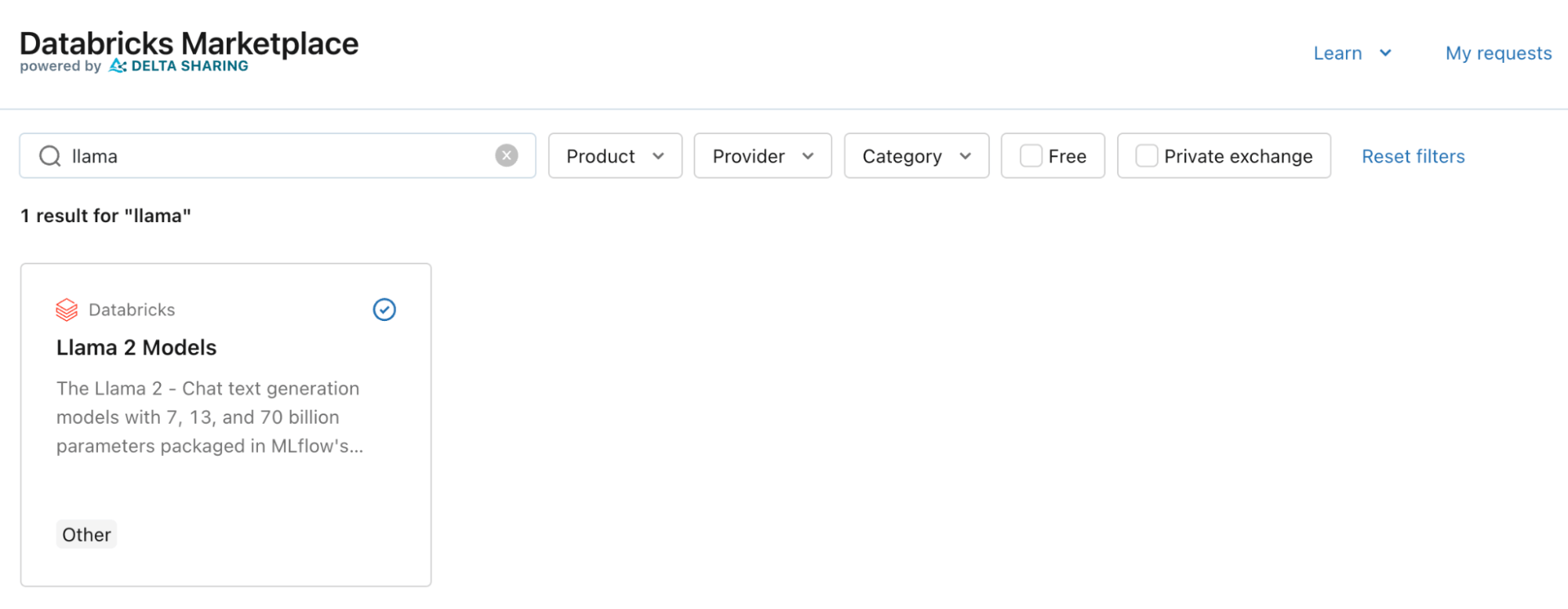

- Access on Databricks Marketplace. You can preview the notebook and get instant access to the chat family of Llama 2 models from the Databricks Marketplace. The Marketplace makes it easy to discover and evaluate state-of-the-art foundation models that you can manage in the Unity Catalog.

- Centralized Governance in the Unity Catalog. Because the models are now in a catalog, your Llama 2 models automatically get all the centralized governance, auditing, and lineage tracking that comes with the Unity Catalog.

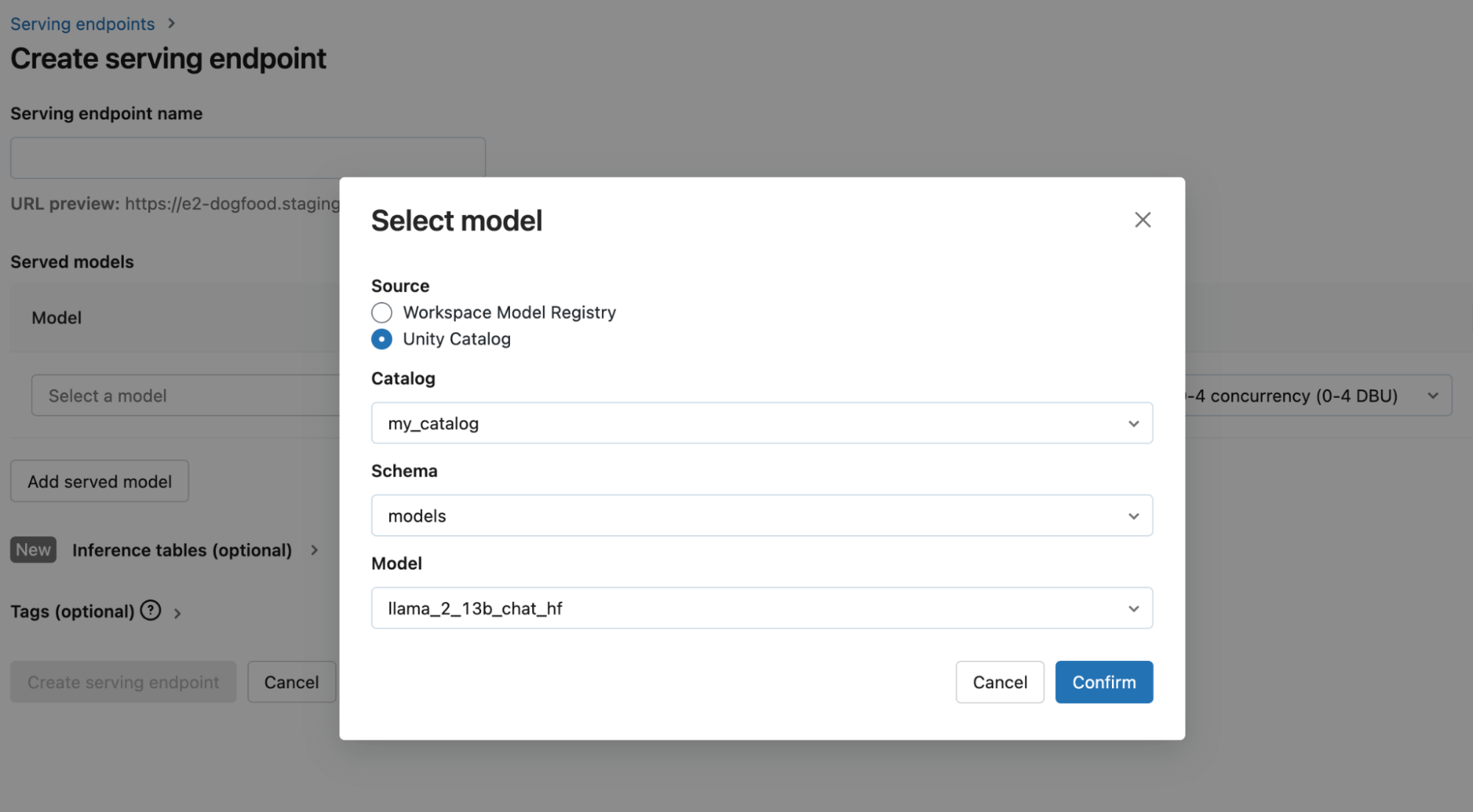

- Deploy in One Click with Optimized GPU Model Serving. We packaged the Llama 2 models in MLflow, so you get single-click deployment to privately host your model with Databricks Model Serving. GPU Model Serving, now in Public Preview, is optimized for large language models, providing low latency and high throughput. This is a good option for use cases that involve sensitive data, or where you cannot send customer data to third parties.

- Saturate Private Endpoints with AI Gateway. Privately hosting models on GPUs can be expensive, which is why we recommend using the MLflow AI Gateway to create and distribute routes for each use case in your organization, so that their combined traffic keeps the shared endpoint fully utilized. The AI Gateway now supports rate limiting for cost control, in addition to secure credential management for Databricks Model Serving endpoints and externally hosted SaaS LLMs.

- Try it yourself: launch the product tour to see how to serve Llama 2 models from the Databricks Marketplace.
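Because the Llama 2 models live in a Unity Catalog catalog, each one is addressed by a three-level name (`catalog.schema.model`), and MLflow refers to a specific registered version as `models:/<catalog>.<schema>.<model>/<version>`. Here is a minimal sketch of building that URI; the helper `uc_model_uri` and the catalog, schema, and model names used with it are hypothetical placeholders.

```python
from typing import Union


def uc_model_uri(catalog: str, schema: str, model: str, version: Union[int, str]) -> str:
    """Return an MLflow URI for one version of a Unity Catalog model (hypothetical helper).

    Each namespace component must be non-empty and must not contain a dot,
    because dots separate the three levels of the Unity Catalog name.
    """
    for part in (catalog, schema, model):
        if not part or "." in part:
            raise ValueError(f"invalid namespace component: {part!r}")
    return f"models:/{catalog}.{schema}.{model}/{version}"


# Placeholder names; substitute the catalog you created from the Marketplace listing.
uri = uc_model_uri("my_llama_catalog", "models", "llama_2_7b_chat_hf", 1)
```

With the MLflow registry pointed at Unity Catalog (`mlflow.set_registry_uri("databricks-uc")`), a URI of this form can be passed to `mlflow.pyfunc.load_model`. Every such access then falls under the catalog’s permissions and audit logging.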
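After a model is deployed to a Model Serving endpoint, clients query it over REST at the workspace’s `/serving-endpoints/<endpoint-name>/invocations` path with a bearer token. The sketch below uses only the Python standard library and builds the request without sending it. The host, endpoint name, and token are placeholders. The input column names (`prompt`, `temperature`, `max_new_tokens`) are an assumption about the packaged models’ signature, so confirm them against the model signature shown in Unity Catalog.

```python
import json
import urllib.request


def build_invocation_request(host: str, endpoint_name: str, token: str, prompt: str,
                             temperature: float = 0.1,
                             max_new_tokens: int = 128) -> urllib.request.Request:
    """Build (but do not send) a POST request to a Model Serving endpoint."""
    # ASSUMPTION: the served model accepts these three input columns.
    payload = {
        "dataframe_split": {
            "columns": ["prompt", "temperature", "max_new_tokens"],
            "data": [[prompt, temperature, max_new_tokens]],
        }
    }
    url = f"https://{host}/serving-endpoints/{endpoint_name}/invocations"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


# Placeholder values for illustration only.
req = build_invocation_request("example.cloud.databricks.com", "llama2-7b-chat",
                               "<token>", "What is Llama 2?")
```

To send the request, pass it to `urllib.request.urlopen(req)` and parse the JSON response. `dataframe_split` is one of the standard MLflow scoring input layouts.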
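Rate limiting for cost control means capping how many calls each route, or each user, can make to a shared endpoint in a given time window. The AI Gateway’s own limit configuration is not shown here. The following is a generic, hypothetical fixed-window limiter that illustrates the idea, and it should not be read as the AI Gateway’s implementation.

```python
import time
from typing import Callable, Dict, Tuple


class FixedWindowRateLimiter:
    """Allow at most `calls` requests per `period` seconds for each key (illustrative only)."""

    def __init__(self, calls: int, period: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.calls = calls
        self.period = period
        self.clock = clock
        # key (e.g. a route name) -> (window start time, calls made in that window)
        self._windows: Dict[str, Tuple[float, int]] = {}

    def allow(self, key: str) -> bool:
        """Record a call for `key` and return True, or return False if over the limit."""
        now = self.clock()
        start, count = self._windows.get(key, (now, 0))
        if now - start >= self.period:
            # The previous window has expired, so start a fresh one.
            start, count = now, 0
        if count >= self.calls:
            return False
        self._windows[key] = (start, count + 1)
        return True
```

A fixed window keeps the least state. A token bucket spreads calls more smoothly across window boundaries, but it has to track a fractional refill for each key.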

Get Started with Generative AI on Databricks

The Databricks Lakehouse AI platform enables developers to rapidly build and deploy generative AI applications with confidence.

Stay tuned for more exciting announcements soon!