Availability is the most important feature

— Mike Fisher, former CTO of Etsy

"I get knocked down, but I get up again..."

— Tubthumping, Chumbawamba

Every organization pays attention to resilience. The big question is when.

Startups tend to address resilience only when their systems are already down, often taking a very reactive approach. For a scaleup, excessive system downtime represents a significant bottleneck to the organization, both from the effort expended on restoring function and from the impact of customer dissatisfaction.

To move past this, resilience needs to be built into the business objectives, which will influence the architecture, design, product management, and even governance of business systems. In this article, we'll explore the Resilience and Observability Bottleneck: how you can recognize it coming, how you might realize it has already arrived, and what you can do to survive the bottleneck.

How did you get into the bottleneck?

One of the first goals of a startup is getting an initial product out to market. Getting it in front of as many users as possible and receiving feedback from them is typically the highest priority. If customers use your product and see the unique value it delivers, your startup will carve out market share and have a dependable revenue stream. However, getting there often comes at a cost to the resilience of your product.

A startup may decide to skip automating recovery processes, because at a small scale, the organization believes it can provide resilience through the developers who know the system well. Incidents are handled reactively, and resolutions come by hand. Possible solutions might be spinning up another instance to handle increased load, or restarting a service when it's failing. Your first customers might even be aware of your lack of true resilience as they experience system outages.

At one of our scaleup engagements, to get the system out to production quickly, the client deprioritized health check mechanisms in the cluster. The developers managed the startup process successfully for the few times when it was necessary. For an important demo, it was decided to spin up a new cluster so that there would be no externalities impacting the system performance. Unfortunately, actively managing the status of all the services running in the cluster was overlooked. The demo started before the system was fully operational and an important component of the system failed in front of prospective customers.
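A readiness gate of the kind that was skipped here can be quite small. The sketch below is illustrative only: `wait_until_ready` and the idea of passing one callable per service are assumptions made for this example, not the client's actual mechanism. It polls every check until all of them pass or a timeout expires, and reports which services are still not ready.

```python
import time
from typing import Callable, Dict, List


def wait_until_ready(
    checks: Dict[str, Callable[[], bool]],
    timeout_s: float = 60.0,
    poll_interval_s: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> List[str]:
    """Poll every readiness check until all pass or the timeout expires.

    Returns the names of services that are still not ready.
    An empty list means every service reported ready.
    """
    deadline = clock() + timeout_s
    pending = set(checks)
    while pending:
        # Iterate over a sorted copy so we can discard from the set safely.
        for name in sorted(pending):
            try:
                if checks[name]():
                    pending.discard(name)
            except Exception:
                # A check that raises is treated as "not ready yet".
                pass
        if not pending or clock() >= deadline:
            break
        sleep(poll_interval_s)
    return sorted(pending)
```

In practice each check would probably wrap a call to a service's health endpoint; starting the demo only when the returned list is empty would have caught the component that was still coming up.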

Fundamentally, your organization has made an explicit trade-off, prioritizing user-facing functionality over automating resilience and gambling that the organization can recover from downtime through manual intervention. The trade-off is likely acceptable for a startup while it's at a manageable scale. However, as you experience high growth rates and transform from a startup to a scaleup, the lack of resilience proves to be a scaling bottleneck. It manifests as an increasing occurrence of service interruptions, which translates into more work on the Ops side of the DevOps team's responsibilities and reduces the productivity of teams. The impact seems to appear suddenly, because the effect tends to be non-linear relative to the growth of the customer base. What was recently manageable is suddenly extremely impactful. Eventually, the scale of the system creates manual work beyond the capacity of your team, which bubbles up to affect the customer experience. The combination of reduced productivity and customer dissatisfaction leads to a bottleneck that is hard to survive.

The question then is, how do I know if my product is about to hit a scaling bottleneck? And further, if I know about these signs, how can I avoid the bottleneck or keep pace with my scale? That's what we'll look to answer as we describe common challenges we've experienced with our clients and the solutions we have seen to be most effective.

Signs you are approaching a scaling bottleneck

It's always difficult to operate in an environment in which the scale of the business is changing rapidly. Investing in handling high traffic volumes too early is a waste of resources. Investing too late means your customers are already feeling the effects of the scaling bottleneck.

To shift your operating model from reactive to proactive, you have to be able to predict future behavior with a confidence level sufficient to support important business decisions. Making data-driven decisions is always the goal. The key is to find the leading indicators that will guide you to prepare for, and hopefully avoid, the bottleneck, rather than react to a bottleneck that has already occurred. Based on our experience, we have found a set of indicators related to the common preconditions as you approach this bottleneck.

Resilience is not a first-class consideration

This may be the least obvious sign, but it is arguably the most important. Resilience is thought of as purely a technical problem and not a feature of the product. It's deprioritized for new features and enhancements. In some cases, it's not even a concern to be prioritized.

Here's a quick test. Listen in on the different discussions that occur within your teams, and note the context in which resilience is discussed. You may find that it isn't included as part of a standup, but it does make its way into a developer meeting. When the development team isn't responsible for operations, resilience is effectively siloed away.

In those cases, pay close attention to how resilience is discussed. Evidence of inadequate focus on resilience is often indirect. At one client, we've seen it come in the form of technical debt cards that not only aren't prioritized, but become a constantly growing list. At another client, the operations team had their backlog filled purely with customer incidents, the majority of which dealt with the system either not being up or being unable to process requests. When resilience concerns are not part of a team's backlog and roadmap, you have evidence that it is not core to the product.

Solving resilience by hand (reactive manual resilience)

How your organization resolves service outages can be a key indicator of whether your product can scale up effectively or not. The characteristics we describe here are fundamentally caused by a lack of automation, resulting in excessive manual effort. Are service outages resolved via restarts by developers? Under high load, is coordination required to scale compute instances?

In general, we find these approaches don't follow sustainable operational practices and are brittle solutions for the next system outage. They include bandaid solutions which alleviate a symptom, but never truly solve it in a way that allows for future resilience.

Ownership of systems is not well defined

When your organization is moving quickly, developing new services and capabilities, quite often key pieces of the service ecosystem, or even the infrastructure, can become "orphaned", without clear responsibility for operations. As a result, production issues may remain unnoticed until customers react. When issues do occur, they take longer to troubleshoot, which delays resolving outages. Resolution is delayed further as the issue ping-pongs from team to team in an effort to find the responsible party, wasting everyone's time.

This problem is not unique to microservice environments. At one engagement, we witnessed similar situations with a monolith architecture lacking clear ownership for parts of the system. In this case, the lack of clear ownership stemmed from a lack of clear system boundaries in a "ball of mud" monolith.

Ignoring the reality of distributed systems

Part of developing effective systems is being able to define and use abstractions that enable us to simplify a complex system to the point that it actually fits in the developer's head. This allows developers to make decisions about the future changes necessary to deliver new value and functionality to the business. However, as in all things, one can go too far, not realizing that these simplifications are actually assumptions hiding critical constraints which impact the system.

Riffing off the fallacies of distributed computing:

- The network is not reliable.
- Your system is affected by the speed of light. Latency is never zero.
- Bandwidth is finite.
- The network is not inherently secure.
- Topology always changes, by design.
- The network and your systems are heterogeneous. Different systems behave differently under load.
- Your virtual machine will disappear when you least expect it, at exactly the wrong time.
- Because people have access to a keyboard and mouse, errors will happen.
- Your customers can (and will) take their next action in < 500ms.

Very often, testing environments provide perfect-world conditions, which avoid violating these assumptions. Systems which don't account for (and test for) these real-world properties are designed for a world in which nothing bad ever happens. As a result, your system will exhibit unanticipated and seemingly non-deterministic behavior as it starts to violate the hidden assumptions. This translates into poor performance for customers, and incredibly difficult troubleshooting processes.
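One lightweight way to stop a test environment from being a perfect world is to inject failures on purpose. The following is a minimal sketch, not a full chaos-testing setup: `with_faults`, `InjectedFault` and `get_price_with_fallback` are hypothetical names invented for this illustration. It wraps a dependency call so that a configurable fraction of calls fail as if the network had dropped them.

```python
import random
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class InjectedFault(ConnectionError):
    """Raised in place of a real network failure during tests."""


def with_faults(
    call: Callable[..., T], failure_rate: float, rng: random.Random
) -> Callable[..., T]:
    """Wrap `call` so that roughly `failure_rate` of invocations fail."""

    def wrapper(*args, **kwargs):
        # rng.random() is in [0.0, 1.0), so 0.0 never fails and 1.0 always fails.
        if rng.random() < failure_rate:
            raise InjectedFault("simulated network failure")
        return call(*args, **kwargs)

    return wrapper


def get_price_with_fallback(
    fetch: Callable[[str], float], sku: str, default: Optional[float]
) -> Optional[float]:
    """Example caller that is expected to survive a failing dependency."""
    try:
        return fetch(sku)
    except ConnectionError:
        return default
```

Passing a seeded `random.Random` keeps a test run repeatable, which helps when the behavior under test would otherwise look non-deterministic.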

Not planning for potential traffic

Estimating future traffic volume is difficult, and we find that we are wrong more often than we are right. Over-estimating traffic means the organization is wasting effort designing for a reality that doesn't exist. Under-estimating traffic could be even more catastrophic. Unexpectedly high traffic loads could happen for a variety of reasons; a social media marketing campaign which unexpectedly goes viral is a good example. Suddenly your system can't manage the incoming traffic, components start to fall over, and everything grinds to a halt.

As a startup, you're always looking to attract new customers and gain more market share. How and when that manifests can be incredibly difficult to predict. At the scale of the internet, anything could happen, and you should assume that it will.

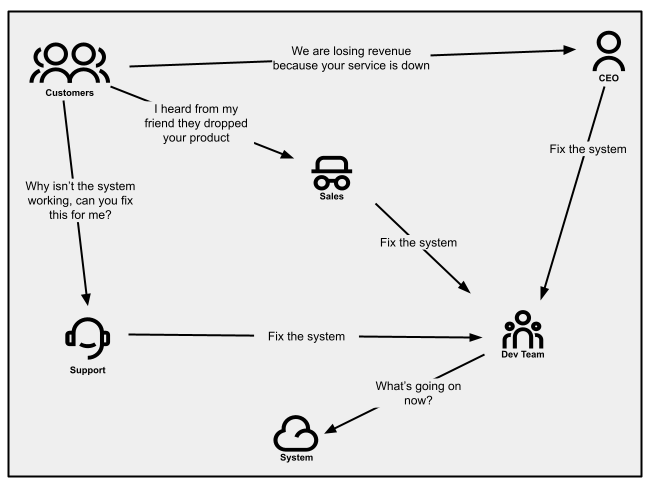

Alerted via customer notifications

When customers are invested in your product and believe the issue is resolvable, they might try to contact your support staff for help. That may be through email, calling in, or opening a support ticket. Service failures cause spikes in call volume or email traffic. Your sales people may even be relaying these messages because (potential) customers are telling them as well. And if service outages affect strategic customers, your CEO might tell you directly (this may be okay early on, but it's certainly not a state you want to be in long term).

Customer communications will not always be clear and straightforward, but rather will be based on a customer's unique experience. If customer success staff do not realize that these are indications of resilience problems, they will proceed with business as usual and your engineering staff will not receive the feedback. When notifications aren't identified and managed correctly, they may then turn non-verbal. For example, you may suddenly find that the rate at which customers are canceling subscriptions increases.

When working with a small customer base, learning about a problem through your customers is "mostly" manageable, as they are fairly forgiving (they are on this journey with you after all). However, as your customer base grows, notifications will begin to pile up towards an unmanageable state.

Figure 1: Communication patterns as seen in an organization where customer notifications are not managed well.

How do you get out of the bottleneck?

Once you have an outage, you want to recover as quickly as possible and understand in detail why it happened, so you can improve your system and ensure it never happens again.

Tackling the resilience of your products and services while in the bottleneck can be difficult. Tactical solutions often mean you end up stuck in fire after fire. However, if it's managed strategically, even while in the bottleneck, not only can you relieve the pressure on your teams, but you can learn from past recovery efforts to help manage through the hypergrowth stage and beyond.

The following five sections are effectively strategies your organization can implement. We believe they flow in order and should be taken as a whole. However, depending on your organization's maturity, you may decide to leverage a subset of strategies. Within each, we lay out several solutions that work towards its respective strategy.

Ensure you have implemented basic resilience techniques

There are some basic techniques, ranging from architecture to organization, that can improve your resiliency. They keep your product in the right place, enabling your organization to scale effectively.

Use multiple zones within a region

For highly critical services (and their data), configure and enable them to run across multiple zones. This should give a bump to your system availability, and increase your resiliency in the case of disruption (within a zone).
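To get a rough feel for the size of that bump, the arithmetic can be sketched as below. This is a back-of-the-envelope model under a strong assumption that is ours, not the article's: zones fail independently and the service stays up as long as at least one zone is up. Real zone failures can be correlated, so treat the result as an upper bound. The function name is hypothetical.

```python
def multi_zone_availability(zone_availability: float, zones: int) -> float:
    """Availability when the service is up if at least one zone is up.

    Assumes independent zone failures (an idealization).
    """
    if zones < 1:
        raise ValueError("zones must be at least 1")
    if not 0.0 <= zone_availability <= 1.0:
        raise ValueError("zone_availability must be between 0 and 1")
    # Probability that every zone is down at the same time.
    all_down = (1.0 - zone_availability) ** zones
    return 1.0 - all_down
```

Under this model, two zones that are each available 99% of the time give about 99.99% combined, since both must be down at once for the service to be unavailable.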

Specify appropriate computing instance types and specifications

Business-critical services should have computing capacity appropriately assigned to them. If services are required to run 24/7, your infrastructure should reflect these requirements.

Match investment to critical service tiers

Many organizations manage investment by identifying critical service tiers, with the understanding that not all business systems share the same importance in terms of delivering customer experience and supporting revenue. Identifying service tiers and associated resilience outcomes informed by service level agreements (SLAs), paired with architecture and design patterns that support the outcomes, provides helpful guardrails and governance for your product development teams.

Clearly define owners across your entire system

Each service that exists within your system should have well-defined owners. This information can be used to help direct issues to the right place, and to people who can effectively resolve them. Implementing a developer portal which provides a software services catalog with clearly defined team ownership helps with internal communication patterns.

Automate manual resilience processes (within a timebox)

Certain resilience problems that have been solved by hand can be automated: actions like restarting a service, adding new instances or restoring database backups. Many actions are easily automated or simply require a configuration change within your cloud service provider. While in the bottleneck, implementing these capabilities can give the team the relief it needs, providing much needed breathing room and time to solve the root cause(s).

Make sure to keep these implementations at their simplest and timeboxed (a couple of days at most). Bear in mind these started out as bandaids, and automating them is just another (albeit better) type of bandaid. Integrate these into your monitoring solution, allowing you to remain aware of how frequently your system is automatically recovering and how long it takes. At the same time, these metrics allow you to prioritize moving away from reliance on these bandaid solutions and make your whole system more robust.
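As a minimal sketch of what such an automated bandaid with built-in measurement might look like (all names here, such as `RecoveryStats` and `recover_if_unhealthy`, are hypothetical, and the health check and restart action are passed in as plain callables):

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class RecoveryStats:
    """Tracks how often automatic recovery ran and how long it took."""

    durations_s: List[float] = field(default_factory=list)

    @property
    def count(self) -> int:
        return len(self.durations_s)

    @property
    def mean_duration_s(self) -> float:
        return sum(self.durations_s) / self.count if self.count else 0.0


def recover_if_unhealthy(
    is_healthy: Callable[[], bool],
    restart: Callable[[], None],
    stats: RecoveryStats,
    clock: Callable[[], float] = time.monotonic,
) -> str:
    """Restart an unhealthy service once and record the recovery time.

    Returns "healthy" (nothing to do), "recovered" (restart worked)
    or "failed" (still unhealthy, so a human should be paged).
    """
    if is_healthy():
        return "healthy"
    started = clock()
    restart()
    if is_healthy():
        stats.durations_s.append(clock() - started)
        return "recovered"
    return "failed"
```

Exporting `count` and `mean_duration_s` to whatever monitoring tool you already use is what turns this from a silent bandaid into a signal: a rising recovery count is a prompt to go after the root cause.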

Improve mean time to restore with observability and monitoring

To work your way out of a bottleneck, you need to understand your current state so you can make effective decisions about where to invest. If you want to be five nines, but have no sense of how many nines you currently provide, then it's hard to even know what path you should be taking.

To know where you are, you need to invest in observability. Observability allows you to be more proactive in timing investment in resilience before it becomes unmanageable.

Centralize your logs to be viewable through a single interface

Aggregate logs from core services and systems to be available through a central interface. This will keep them easily accessible to multiple eyes and reduce troubleshooting efforts (potentially improving mean time to recovery).

Define a clear structured format for log messages

Anyone who's had to parse through aggregated log messages can tell you that when multiple services follow differing log structures it's an incredible mess to find anything. Every service just ends up speaking its own language, and only the original authors understand the logs. Ideally, once those logs are aggregated, anyone from developers to support teams should be able to understand the logs, no matter their origin.

Structure the log messages using an organization-wide standardized format. Most logging tools support a JSON format as a standard, which allows the log message structure to contain metadata like timestamp, severity, service and/or correlation-id. And with log management services (through an observability platform), one can filter and search across these properties to help debug bottleneck issues. To help make search more efficient, prefer fewer log messages with more fields containing pertinent information over many messages with a small number of fields. The actual messages themselves might still be unique to a specific service, but the attributes associated with the log message are helpful to everyone.

Treat your log messages as a key piece of information that is visible to more than just the developers that wrote them. Your support team can become more effective when debugging initial customer queries, because they can understand the structure they are viewing. If every service can speak the same language, the barrier to providing support and debugging assistance is removed.
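As an illustration of the idea, here is one way a shared JSON log format could be sketched with Python's standard `logging` module. The field names follow the metadata listed above; the `JsonFormatter` class itself and the choice of `correlation_id` as the attribute name are assumptions made for this example rather than a prescribed standard.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render every log record as one JSON object with shared fields."""

    def __init__(self, service: str):
        super().__init__()
        self.service = service

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(
                record.created, tz=timezone.utc
            ).isoformat(),
            "severity": record.levelname,
            "service": self.service,
            # Present only when the caller passes it via `extra=`.
            "correlation_id": getattr(record, "correlation_id", None),
            "message": record.getMessage(),
        }
        return json.dumps(entry)


def build_logger(service: str) -> logging.Logger:
    """Create a logger for `service` that writes JSON lines to stderr."""
    logger = logging.getLogger(service)
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter(service))
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger
```

A service would then log with something like `build_logger("checkout").info("payment approved", extra={"correlation_id": "req-123"})`, and every other service using the same formatter produces entries that can be filtered on the same keys.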

Add observability that's close to your customer experience

What will get measured will get managed.

— Peter Drucker

Though infrastructure metrics and service message logs are useful, they are fairly low level and don't provide any context of the actual customer experience. On the other hand, customer notifications are a direct indication of an issue, but they are usually anecdotal and don't provide much in terms of pattern (unless you put in the work to find one).

Monitoring core business metrics allows teams to observe a customer's experience. Typically defined through the product's requirements and features, they provide high-level context around many customer experiences. These are metrics like completed transactions, start and stop rate of a video, API usage or response time metrics. Implicit metrics are also useful in measuring a customer's experience, like frontend load time or search response time. It's crucial to match what is being observed directly to how a customer is experiencing your product. Also important to note: metrics aligned to the customer experience become even more important in a B2B environment, where you might not have the volume of data points necessary to be aware of customer issues when only measuring individual components of a system.

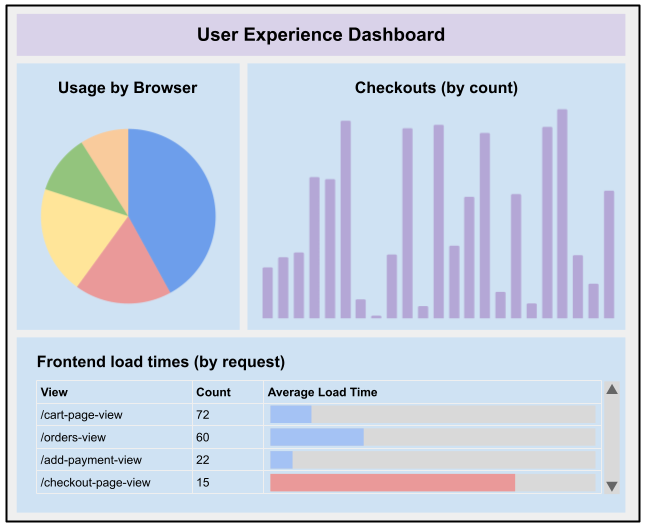

At one client, services started to publish domain events that were related to the product experience: events like added to cart, failed to add to cart, transaction completed, payment approved, etc. These events could then be picked up by an observability platform (like Splunk, ELK or Datadog) and displayed on a dashboard, categorized and analyzed even further. Errors could be captured and categorized, allowing better problem solving on errors related to unexpected customer experience.

Figure 2: Example of what a dashboard focusing on the user experience could look like

Data gathered through core business metrics can help you understand not only what might be failing, but where your system thresholds are and how it manages when it's outside of them. This gives further insight into how you might get through the bottleneck.
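To make the step from domain events to a business metric concrete, here is a small sketch. The event names mirror the examples mentioned earlier (added to cart, failed to add to cart), but their exact spelling and the `success_rate` helper are hypothetical; an observability platform would normally do this aggregation for you.

```python
from collections import Counter
from typing import Iterable, Optional


def success_rate(
    events: Iterable[str], success: str, failure: str
) -> Optional[float]:
    """Share of attempts that succeeded, or None if there were no attempts.

    Events other than `success` and `failure` are ignored.
    """
    counts = Counter(events)
    attempts = counts[success] + counts[failure]
    if attempts == 0:
        return None
    return counts[success] / attempts
```

Charting a value like `success_rate(stream, "added_to_cart", "failed_to_add_to_cart")` over time shows directly what customers are experiencing, and a drop in it points at a threshold being crossed even when individual component metrics still look normal.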

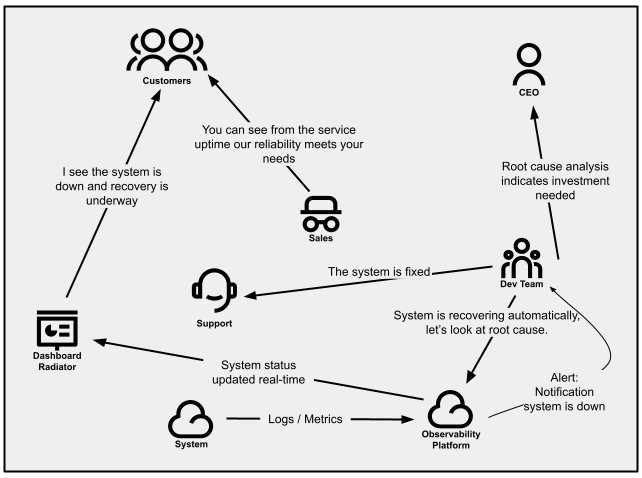

Provide product status insight to customers using status indicators

It can be difficult to manage incoming customer inquiries about the different issues they are facing, with support services quickly finding they are fighting fire after fire. Managing issue volume can be crucial to a startup's success, but within the bottleneck, you need to look for systemic ways of reducing that traffic. The ability to divert call traffic away from support will give some breathing room and a better chance to solve the right problem.

Service status indicators can provide customers the information they are seeking without having to reach out to support. This could come in the form of public dashboards, email messages, or even tweets. These can leverage backend service health and readiness checks, or a combination of metrics to determine service availability, degradation, and outages. During incidents, status indicators can provide a way of updating many customers at once about your product's status.

Building trust with your customers is just as important as creating a reliable and resilient service. Providing methods for customers to understand the services' status and expected resolution timeframe helps build confidence through transparency, while also giving the support staff the space to problem-solve.
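A status indicator can start out as a very small rollup over existing health checks. The sketch below is one possible shape, under assumptions of our own: the three status levels, the notion of a "critical" service set, and the function name `overall_status` are all illustrative choices.

```python
from enum import Enum
from typing import Iterable, Mapping


class Status(Enum):
    OPERATIONAL = "operational"
    DEGRADED = "degraded"
    OUTAGE = "outage"


def overall_status(health: Mapping[str, bool], critical: Iterable[str]) -> Status:
    """Roll per-service health checks up into one customer-facing status.

    Any failing critical service is an outage; any other failure
    means the product is degraded.
    """
    failing = {name for name, ok in health.items() if not ok}
    if failing & set(critical):
        return Status.OUTAGE
    if failing:
        return Status.DEGRADED
    return Status.OPERATIONAL
```

Publishing the resulting value on a public page answers the most common support question ("is it down, or is it just me?") before the customer has to ask it.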

Figure 3: Communication patterns within an organization that proactively manages how customers are notified.

Shift to explicit resilience business requirements

As a startup, new features are often considered more valuable than technical debt, including any work related to resilience. And as stated before, this certainly made sense initially. New features and enhancements help keep customers and bring in new ones. The work to provide new capabilities should, in theory, lead to an increase in revenue.

This doesn't necessarily hold true as your organization grows and discovers new challenges to increasing revenue. Failures of resilience are one source of such challenges. To move beyond this, there needs to be a shift in how you value the resilience of your product.

Understand the costs of service failure

For a startup, the consequences of not hitting a revenue target this "quarter" might be different than for a scaleup or a mature product. But as often happens, the initial "new features are more valuable than technical debt" decision becomes a permanent fixture in the organizational culture, whether or not the actual revenue impact is provable, or even calculated. An aspect of the maturity needed when moving from startup to scaleup is in the data-driven element of decision-making. Is the organization tracking the value of every new feature shipped? Is the organization analyzing operational investments as contributing to new revenue rather than just a cost center? And are the costs of an outage or recurring outages known, both in terms of wasted internal labor hours and lost revenue? As a startup, in most of these regards, you've got nothing to lose. But this is not true as you grow.

Therefore, it's important to start analyzing the costs of service failures as part of your overall product management and revenue recognition value stream. Understanding your revenue "velocity" will provide an easy way to quantify the direct cost-per-minute of downtime. Tracking the costs to the team for everyone involved in an outage incident, from customer support calls to developers to management to public relations/marketing and even to sales, can be an eye-opening experience. Add on the opportunity costs of dealing with an outage rather than expanding customer outreach or delivering new features, and the true scope and impact of failures in resilience become apparent.
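A first-pass version of this calculation fits in a few lines. The sketch below is deliberately simplified and rests on assumptions that are ours: revenue accrues evenly across the year (real revenue usually has peaks), everyone involved is tied up for the full duration of the outage, and opportunity cost is left out. The function name and parameters are hypothetical.

```python
MINUTES_PER_YEAR = 365 * 24 * 60


def downtime_cost(
    annual_revenue: float,
    outage_minutes: float,
    people_involved: int,
    loaded_hourly_rate: float,
) -> float:
    """Rough direct cost of one outage: lost revenue plus internal labor.

    Assumes revenue is spread evenly over the year and that every
    person involved is occupied for the whole outage.
    """
    revenue_per_minute = annual_revenue / MINUTES_PER_YEAR
    lost_revenue = revenue_per_minute * outage_minutes
    labor_cost = people_involved * loaded_hourly_rate * (outage_minutes / 60)
    return lost_revenue + labor_cost
```

Even a rough number like this gives product and engineering a shared unit for weighing resilience work against new features.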

Manage resilience as a feature

Start treating resilience as more than just a technical expectation. It's a core feature that customers will come to expect. And because they expect it, it should become a first-class consideration among other features. Part of this evolution is about shifting where the responsibility lies. Instead of it being purely a responsibility for tech, it's one for product and the business. Multiple layers within the organization will need to consider resilience a priority. This demonstrates that resilience gets the same amount of attention that any other feature would get.

Close collaboration between product and technology is vital to make sure you're able to set the correct expectations across story definition, implementation and communication to other parts of the organization. Resilience, though a core feature, is still invisible to the customer (unlike new features like additions to a UI or API). These two groups need to collaborate to ensure resilience is prioritized appropriately and implemented effectively.

The objective here is shifting resilience from being a reactionary concern to a proactive one. And if your teams are able to be proactive, you can also react more appropriately when something significant is happening to your business.

Requirements should reflect realistic expectations

Knowing realistic expectations for resilience relative to requirements and customer expectations is key to keeping your engineering efforts cost effective. Different levels of resilience, as measured by uptime and availability, have vastly different costs. The cost difference between "three nines" and "four nines" of availability (99.9% vs 99.99%) may be a factor of 10x.

It's important to understand your customer requirements for each business capability. Do you and your customers expect a 24x7x365 experience? Where are your customers based? Are they local to a specific region or are they global? Are they primarily consuming your service via mobile devices, or are your customers integrated via your public API? For example, it is an ineffective use of capital to provide 99.999% uptime on a service delivered via mobile devices which only enjoy 99.9% uptime due to cell phone reliability limits.

These are important questions to ask when thinking about resilience, because you don't want to pay for the implementation of a level of resiliency that has no perceived customer value. They also help to set and manage expectations for the product being built, the team building and maintaining it, the folks in your organization selling it and the customers using it.
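The arithmetic behind these availability targets is worth having at hand. The sketch below converts an availability figure into allowed downtime per year and combines components that must all be up for the customer to be served, as in the mobile example above. It assumes a 365-day year and independent failures; both helper names are hypothetical.

```python
MINUTES_PER_YEAR = 365 * 24 * 60


def allowed_downtime_minutes(availability: float) -> float:
    """Minutes of downtime per year permitted by an availability target."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def serial_availability(*components: float) -> float:
    """Availability seen by a customer when every component must be up.

    Assumes component failures are independent.
    """
    result = 1.0
    for availability in components:
        result *= availability
    return result
```

Three nines allows about 525.6 minutes of downtime a year and four nines about 52.6. Combining a 99.999% service with a 99.9% delivery channel via `serial_availability(0.99999, 0.999)` gives roughly 99.9%, which is why the extra nines on the service are invisible to a mobile customer.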

Feel out your problems first and avoid overengineering

If you're solving resiliency problems by hand, your first instinct might be to just automate it. Why not, right? Though it can help, it's most effective when the implementation is timeboxed to a very short period (a couple of days at most). Spending more time will likely lead to overengineering in an area that was actually just a symptom. A large amount of time, energy and money will be invested into something that is just another bandaid and most likely is not sustainable, or even worse, causes its own set of second-order challenges.

Instead of going straight to a tactical solution, this is an opportunity to really feel out your problem: where do the fault lines exist, what is your observability trying to tell you, and what design choices correlate to these failures? You may be able to discover these fault lines through stress, chaos or exploratory testing. Use this opportunity to your advantage to discover other system stress points and determine where you can get the largest value for your investment.

As your business grows and scales, it's critical to re-evaluate past decisions. What made sense during the startup phase may not get you through the hypergrowth stages.

Leverage multiple techniques when gathering requirements

Gathering requirements for technically oriented features can be difficult. Product managers or business analysts who are not versed in the nomenclature of resilience can find it hard to understand. This often translates into vague requirements like "Make x service more resilient" or "100% uptime is our goal". The requirements you define are as important as the resulting implementations. There are many techniques that can help us gather those requirements.

Try running a pre-mortem before writing requirements. In this lightweight activity, individuals in different roles give their perspectives about what they think could fail, or what is failing. A pre-mortem provides valuable insights into how folks perceive potential causes of failure, and the related costs. The ensuing discussion helps prioritize things that need to be made resilient, before any failure occurs. At a minimum, you can create new test scenarios to further validate system resilience.

Another option is to write requirements alongside tech leads and architecture SMEs. The responsibility to create an effective resilient system is now shared amongst leaders on the team, and each can speak to different aspects of the design.

These two techniques show that requirements gathering for resilience features isn't a single responsibility. It should be shared across different roles within a team. Throughout every technique you try, keep in mind who should be involved and the perspectives they bring.

Evolve your architecture and infrastructure to meet resiliency needs

For a startup, the design of the architecture is dictated by the speed at which you can get to market. That often means the design that worked at first can become a bottleneck in your transition to scaleup.

Your product's resilience will ultimately come down to the technology choices you make. It may mean examining the overall design and architecture of the system and evolving it to meet the product's resilience needs. Much of what we spoke to earlier can help give you data points and slack within the bottleneck. Within that space, you can evolve the architecture and incorporate patterns that enable a truly resilient product.

Broadly look at your architecture and determine appropriate trade-offs

Either implicitly or explicitly, when the initial architecture was
created, trade-offs were made. During the experimentation and gaining
traction phases of a startup, there is a high degree of focus on
getting something to market quickly, keeping development costs low,
and being able to easily modify or pivot product direction. The
trade-off is sacrificing the benefits of resilience
that would come from your ideal architecture.

Take an API backed by Functions as a Service (FaaS). This approach is a great way to
create something with little to no management of the infrastructure it
runs on, potentially ticking all three boxes of our focus area. On the
other hand, it is limited by the infrastructure it’s allowed to
run on, the timing constraints of the service and the potential
communication complexity between many different functions. Though not
unachievable, the constraints of the architecture may make it
difficult or complex to achieve the resilience your product needs.

As the product and organization grow and mature, their constraints
also evolve. It’s important to acknowledge that early design decisions
may no longer be appropriate to the current operating environment, and
consequently new architectures and technologies need to be introduced.
If not addressed, the trade-offs made early on will only amplify the
bottleneck within the hypergrowth phase.

Enhance resilience with effective error recovery strategies

Data gathered from monitors can show where high failure
rates are coming from, be it third-party integrations, backed-up queues,
backoffs or others. This data can drive decisions on which
recovery strategies are appropriate to implement.

Use caching where appropriate

When retrieving information, caching strategies can help in two
ways. Primarily, they can be used to reduce the load on the service by
providing cached results for the same queries. Caching can also be
used as the fallback response when a backend service fails to return
successfully.

The trade-off is potentially serving stale data to customers, so
ensure that your use case is not sensitive to stale data. For example,
you wouldn’t want to use cached results for real-time stock price
queries.
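
Both uses of a cache can be sketched in a few lines. The example below is a
minimal illustration, not a production cache: `FallbackCache`, `fetch`,
`ttl_seconds` and `clock` are hypothetical names introduced here, and the
entries live in process memory rather than in a shared cache.

```python
import time
from typing import Callable, Dict, Tuple


class FallbackCache:
    """Serve fresh cached values to cut load; serve stale ones if the backend fails."""

    def __init__(self, fetch: Callable[[str], str], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch          # hypothetical call to the backend service
        self._ttl = ttl_seconds      # how long an entry counts as fresh
        self._clock = clock          # injectable so the behavior is testable
        self._entries: Dict[str, Tuple[float, str]] = {}

    def get(self, key: str) -> str:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]          # fresh hit: no backend call at all
        try:
            value = self._fetch(key)
        except Exception:
            if entry is not None:
                return entry[1]      # backend failed: fall back to the stale value
            raise                    # nothing cached, so surface the failure
        self._entries[key] = (now, value)
        return value
```

The stale fallback is exactly where the trade-off described above shows up: a
caller cannot tell a fresh value from a stale one unless the cache also
returns the entry’s age.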

Use default responses where appropriate

As an alternative to caching, which provides the last known
response for a query, it is possible to provide a static default value
when the backend service fails to return successfully. For example,
providing retail pricing as the fallback response for a pricing
discount service will do no harm if it is better to risk losing a sale
rather than risk losing money on a transaction.
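
The pricing example can be sketched as follows. This is an illustration under
assumptions: `price_with_default` and `fetch_discounted_price` are
hypothetical names, and a real service would likely catch narrower exception
types and record the fallback in its monitoring.

```python
from decimal import Decimal
from typing import Callable


def price_with_default(retail_price: Decimal,
                       fetch_discounted_price: Callable[[], Decimal]) -> Decimal:
    """Return the discounted price, or the retail price if the discount service fails."""
    try:
        return fetch_discounted_price()
    except Exception:
        # Static default: charging full retail may lose a sale, but never loses money.
        return retail_price
```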

Use retry strategies for mutation requests

Where a client is calling a service to effect a change in the data,
the use case may require a successful request before proceeding. In
this case, retrying the call may be appropriate in order to minimize
how often error management processes need to be employed.

There are some important trade-offs to consider. Retries without
delays risk causing a storm of requests that brings the whole system
down under the load. Using an exponential backoff delay mitigates the
risk of traffic load, but instead ties up connection sockets waiting
for a long-running request, which causes a different set of
failures.
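
A bounded retry loop with exponential backoff might look like the sketch
below. The function name, parameters and default values are assumptions made
for illustration. Random jitter is an addition not discussed above; it is a
common way to stop many clients from retrying in lockstep. Capping both the
attempt count and the delay limits how long a caller, and its connection, can
be held up.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(call: Callable[[], T], max_attempts: int = 4,
                       base_delay: float = 0.1, max_delay: float = 2.0,
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry `call`, waiting up to base_delay * 2**attempt (capped) between tries."""
    for attempt in range(max_attempts):
        try:
            return call()
        except Exception:
            if attempt == max_attempts - 1:
                raise                      # out of attempts: hand off to error management
            ceiling = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, ceiling))  # jitter spreads out retrying clients
    raise ValueError("max_attempts must be at least 1")
```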

Use idempotency to simplify error recovery

Clients implementing any type of retry strategy will potentially
generate multiple identical requests. Ensure the service can handle
multiple identical mutation requests, and can also handle resuming a
multi-step workflow from the point of failure.
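
One common way to handle identical mutation requests is a client-supplied
idempotency key. The sketch below covers only that first half (it does not
resume multi-step workflows) and rests on assumptions: `IdempotentHandler` and
`apply_mutation` are hypothetical names, results are kept in memory rather
than in a durable store, and concurrent duplicates are not handled.

```python
from typing import Callable, Dict


class IdempotentHandler:
    """Apply each mutation at most once per client-supplied idempotency key."""

    def __init__(self, apply_mutation: Callable[[dict], dict]) -> None:
        self._apply = apply_mutation            # hypothetical business operation
        self._results: Dict[str, dict] = {}     # in production: a durable store

    def handle(self, idempotency_key: str, request: dict) -> dict:
        if idempotency_key in self._results:
            # A retry of a request we already completed: replay the stored result.
            return self._results[idempotency_key]
        result = self._apply(request)
        self._results[idempotency_key] = result
        return result
```

Because a failed mutation stores nothing, a retry with the same key simply
attempts the operation again.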

Design business appropriate failure modes

In a system, failure is a given and your goal is to protect the end
user experience as much as possible. Especially in cases that are
supported by downstream services, you may be able to anticipate
failures (through observability) and provide an alternate flow. Your
underlying services that leverage these integrations can be designed
with business appropriate failure modes.

Consider an ecommerce system supported by a microservice
architecture. Should downstream services supporting the ordering
function become overwhelmed, it would be more appropriate to
temporarily disable the order button and present a limited error
message to a customer. While this provides clear feedback to the user,
Product Managers concerned with sales conversions might instead allow
for orders to be captured and alert the customer to a delay in order
confirmation.

Failure modes should be embedded into upstream systems, so as to ensure
business continuity and customer satisfaction. Depending on your
architecture, this might involve your CDN or API gateway returning
cached responses if requests are overloading your subsystems. Or as
described above, your system might provide for an alternate path to
eventual consistency for specific failure modes. This is a far more
effective and customer focused approach than the presentation of a
generic error page that conveys ‘something has gone wrong’.
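
The order-capture alternative from the ecommerce example could be sketched
like this. All names (`OrderIntake`, `place_order`, `pending`, `drain`) are
hypothetical, and the in-memory deque stands in for whatever durable queue a
real system would use.

```python
from collections import deque
from typing import Callable, Deque


class OrderIntake:
    """Accept orders even when the downstream ordering service is overwhelmed."""

    def __init__(self, place_order: Callable[[dict], str]) -> None:
        self._place_order = place_order          # hypothetical downstream call
        self.pending: Deque[dict] = deque()      # stand-in for a durable queue

    def submit(self, order: dict) -> dict:
        try:
            confirmation = self._place_order(order)
            return {"status": "confirmed", "confirmation": confirmation}
        except Exception:
            # Business-appropriate failure mode: keep the sale, defer the confirmation.
            self.pending.append(order)
            return {"status": "received",
                    "message": "Your order was received; confirmation may be delayed."}

    def drain(self) -> int:
        """Replay captured orders once the downstream service recovers."""
        processed = 0
        while self.pending:
            self._place_order(self.pending[0])   # raises if still unhealthy
            self.pending.popleft()
            processed += 1
        return processed
```

The customer sees a specific, honest status instead of a generic error, and
the system reaches consistency later when `drain` succeeds.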

Resolve single points of failure

A single service can easily go from managing a single
responsibility of the product to multiple. For a startup, appending to
an existing service is often the simplest approach, as the
infrastructure and deployment path is already solved. However,
services can easily bloat and become a monolith, creating a point of
failure that can bring down many or all parts of the product. In cases
like this, you’ll need to understand ways to split up the architecture,
while also keeping the product as a whole functional.

At a fintech client, during a hyper-growth period, load
on their monolithic system would spike wildly. Due to the monolithic
nature, all of the functions were brought down simultaneously,
resulting in lost revenue and unhappy customers. The long-term
solution was to start splitting the monolith into several separate
services that could be scaled horizontally. In addition, they
introduced event queues, so transactions were never lost.

Implementing a microservice approach is not a simple and straightforward
task, and does take time and effort. Start by defining a domain that
requires a resiliency boost, and extract its capabilities piece by piece.
Roll out the new service, adjust infrastructure configuration as needed (increase
provisioned capacity, implement auto scaling, etc.) and monitor it.
Ensure that the user journey hasn’t been affected, and resilience as
a whole has improved. Once stability is achieved, continue to iterate over
each capability in the domain. As noted in the client example, this is
also an opportunity to introduce architectural elements that help increase
the general resilience of your system. Event queues, circuit breakers, bulkheads and
anti-corruption layers are all useful architectural components that
increase the overall reliability of the system.
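
Of the components named above, the circuit breaker is the easiest to show in
a few lines. The sketch below is one simplified reading of the pattern, with
hypothetical names and thresholds: after a number of consecutive failures it
rejects calls outright for a cooling-off period, then lets a single trial call
through. It is not thread-safe.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised when calls are rejected without reaching the dependency."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._threshold = failure_threshold   # consecutive failures before opening
        self._reset_timeout = reset_timeout   # seconds to wait before a trial call
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None   # None means the circuit is closed

    def call(self, func: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_timeout:
                raise CircuitOpenError("dependency is failing; call rejected")
            # Timeout elapsed: fall through and allow one trial call (half-open).
        try:
            result = func()
        except Exception:
            self._failures += 1
            if self._opened_at is not None or self._failures >= self._threshold:
                self._opened_at = self._clock()   # open (or re-open) the circuit
            raise
        self._failures = 0
        self._opened_at = None                    # success closes the circuit
        return result
```

Rejecting calls quickly protects both sides: the struggling dependency gets
room to recover, and callers fail fast instead of queueing behind timeouts.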

Continually optimize your resilience

It’s one thing to get through the bottleneck; it’s another to stay
out of it. As you grow, your system resiliency will be continually
tested. New features result in new pathways for increased system load.
Architectural changes introduce unknown system stability. Your
organization will need to stay ahead of what will eventually come. As it
matures and grows, so should your investment in resilience.

Regularly chaos test to validate system resilience

Chaos engineering is the bedrock of truly resilient products. The
core value is the ability to generate failure in ways that you might
never think of. And while that chaos is creating failures, running
through user scenarios at the same time helps to understand the user
experience. This can provide confidence that your system can withstand
unexpected chaos. At the same time, it identifies which user
experiences are impacted by system failures, giving context on what to
improve next.

Though you may feel more comfortable testing against a dev or QA
environment, the value of chaos testing comes from production or
production-like environments. The goal is to understand how resilient
the system is in the face of chaos. Early environments are (usually)
not provisioned with the same configurations found in production, and thus
will not provide the confidence needed. Running a test like
this in production can be daunting, so make sure you have confidence in
your ability to restore service. This means the entire system can be
spun back up and data can be restored if needed, all through automation.

Start with small, understandable scenarios that can give useful data.
As you gain experience and confidence, consider using your load/performance
tests to simulate users while you execute your chaos testing. Ensure teams and
stakeholders are aware that an experiment is about to be run, so they
are prepared to monitor (in case things go wrong). Frameworks like
Litmus or Gremlin can provide structure to chaos engineering. As
confidence and maturity in your resilience grow, you can start to run
experiments where teams are not alerted beforehand.
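
To make “small, understandable scenario” concrete, here is a toy sketch of
the idea at the level of a single function call. It does not use or imitate
the Litmus or Gremlin APIs; `with_chaos`, `run_experiment` and `InjectedFault`
are hypothetical names, and a real experiment would inject faults at the
infrastructure level rather than in process.

```python
import random
from typing import Callable, TypeVar

T = TypeVar("T")


class InjectedFault(Exception):
    """Marks a failure that the experiment caused on purpose."""


def with_chaos(func: Callable[[], T], failure_rate: float,
               rng: random.Random) -> Callable[[], T]:
    """Wrap `func` so that a fraction of calls fail before reaching it."""
    def wrapped() -> T:
        if rng.random() < failure_rate:
            raise InjectedFault("chaos experiment: simulated dependency failure")
        return func()
    return wrapped


def run_experiment(scenario: Callable[[], object], runs: int) -> float:
    """Run a user scenario repeatedly and return the share of runs that succeeded."""
    successes = 0
    for _ in range(runs):
        try:
            scenario()
            successes += 1
        except Exception:
            pass   # a real experiment would record which user journey broke
    return successes / runs
```

Passing a seeded `random.Random` keeps a run reproducible, which helps when
comparing results before and after a resilience fix.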

Recruit specialists with knowledge of resilience at scale

Hiring generalists when building and delivering an initial product
makes sense. Time and money are incredibly valuable, so having
generalists provides the flexibility to ensure you can get out to
market quickly and not eat away at the initial investment. However,
the teams have taken on more than they can handle and, as your product
scales, what was once good enough is no longer the case. A slightly
unstable system that made it to market will continue to get more
unstable as you scale, because the skills required to manage it have
overtaken the skills of the current team. In the same vein as
technical debt, this can be a slippery slope and, if not addressed,
the problem will continue to compound.

To sustain the resilience of your product, you’ll need to recruit
for that expertise to focus on that capability. Experts bring in a
fresh view on the system in place, along with their ability to
identify gaps and areas for improvement. Their past experiences can
have a two-fold effect on the team, providing much needed guidance in
areas that sorely need it, and a further investment in the growth of
your employees.

Always maintain or improve your reliability

In 2021, the State of DevOps report expanded the fifth key metric from availability to reliability.
Under operational performance, it asserts a product’s ability to
keep its promises. Resilience ties directly into this, as it’s a
key business capability that can ensure your reliability.

With many organizations pushing more frequently to production,
there need to be assurances that reliability remains the same or gets better.
With your observability and monitoring in place, ensure what it
tells you matches what your service level objectives (SLOs) state. With every deployment to
production, the monitors should not deviate from what your SLAs
guarantee. Certain deployment structures, like blue/green or canary
(to some extent), can help to validate the changes before being
released to a wide audience. Running tests effectively in production
can increase confidence that your agreements haven’t swayed and
resilience has remained the same or better.
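
Checking monitors against an SLO after a deployment can be reduced to a small
comparison. The sketch below assumes a request-based availability objective;
`availability`, `meets_slo` and the request counts are hypothetical, and real
figures would come from your monitoring system.

```python
def availability(successful_requests: int, total_requests: int) -> float:
    """Fraction of requests served successfully in the observation window."""
    if total_requests == 0:
        return 1.0   # no traffic, so nothing has violated the objective
    return successful_requests / total_requests


def meets_slo(slo_target: float, successful_requests: int,
              total_requests: int) -> bool:
    """Gate for a canary or blue/green step: measured availability meets the SLO."""
    return availability(successful_requests, total_requests) >= slo_target
```

A canary rollout could call `meets_slo` on the canary’s own traffic before
widening the audience, for example with a target of `0.99`.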

Resilience and observability as your organization grows

Phase 1: Experimenting

- Prototype solutions, with hyper focus on getting a product to market quickly

Phase 2: Getting Traction

- Resilience and observability are manually implemented via developer intervention
- Prioritization for solving resilience mainly comes from technical debt
- Dashboards reflect low level service statistics like CPU and RAM
- Majority of support issues come in via calls or text messages from customers

Phase 3: (Hyper) Growth

- Resilience is a core feature delivered to customers, prioritized in the same vein as features
- Observability is able to reflect the overall customer experience, reflected through dashboards and monitoring
- Re-architect or recreate problematic services, improving the resilience in the process

Phase 4: Optimizing

- Platforms evolve from internal facing services, productizing observability and compute environments
- Run periodic chaos engineering exercises, with little to no notice
- Augment teams with engineers that are versed in resilience at scale

Summary

As a scaleup, what determines your ability to effectively navigate the
(hyper)growth phase is in part tied to the resilience of your
product. The high growth rate starts to put pressure on a system that was
developed during the startup phase, and failure to address the resilience of
that system often results in a bottleneck.

To minimize risk, resilience needs to be treated as a first-class citizen.
The details may vary according to your context, but at a high level the
following considerations can be effective:

- Resilience is a key feature of your product. It is not just a
  technical detail, but a key component that your customers will come to expect,
  shifting the company towards a proactive approach.
- Build customer status indicators to help divert some support requests,
  allowing breathing room for your team to solve the important problems.
- The customer experience should be reflected within your observability stack.
  Monitor core business metrics that reflect experiences your customers have.
- Understand what your dashboards and monitors are telling you, to get a sense
  of what are the most critical areas to solve.
- Evolve your architecture to meet your resiliency goals as you identify
  specific challenges. Initial designs may work at small scale but become
  increasingly limiting as you transition to a scaleup.
- When architecting failure modes, find ways to fail that are friendly to the
  consumer, helping to ensure continuity and customer satisfaction.
- Define realistic resilience expectations for your product, and understand the
  limitations with which it’s being served. Use this knowledge to provide your
  customers with effective SLAs and reasonable SLOs.
- Optimize your resilience when you’re through the bottleneck. Make chaos
  engineering part of a regular practice, or recruit specialists.

Successfully incorporating these practices results in a future organization
where resilience is built into business objectives, across all dimensions of
people, process, and technology.