We stay in a world the place numerous programs—social networks, monitoring, inventory exchanges, web sites, IoT units—all repeatedly generate volumes of knowledge within the type of occasions, captured in programs like Apache Kafka and Amazon Kinesis. One can carry out all kinds of analyses, like aggregations, filtering, or sampling, on these occasion streams, both on the report degree or over sliding time home windows. On this weblog, I’ll present how Rockset can serve a stay dashboard, which surfaces analytics on real-time Twitter knowledge ingested into Rockset from a Kinesis stream.

Organising a Kinesis Stream

The Python code snippet beneath exhibits tips on how to create a Kinesis stream programmatically. This may also be achieved via the AWS Console or the AWS CLI.

import boto3

kinesis = boto3.consumer('kinesis') # requires AWS credentials to be current in env

kinesis.create_stream(StreamName="twitter-stream", ShardCount=5)

Writing Tweets to Kinesis

Right here, I will probably be utilizing the Tweepy module to fetch tweets via a streaming search API. This API permits me to specify an inventory of phrases that I need to embody in my search (e.g. “music”, “fb”, “apple”). You must have a Twitter developer account so as to get entry to the Twitter Streaming API. Right here, I’ve a StreamListener, which is registered to be notified on a tweet arrival. Upon receiving a tweet, it writes the tweet knowledge to one of many 5 random shards of the Kinesis stream.

# twitter api credentials

access_token=...

access_token_secret=...

consumer_key=...

consumer_secret=...

class TweetListener(StreamListener):

def __init__(self, stream_name):

self.kinesis = boto3.consumer('kinesis')

self.stream_name = stream_name

def on_data(self, knowledge):

report = {}

report['Data'] = knowledge

report['PartitionKey'] = ''.be part of(random.selection(chars) for _ in vary(measurement))

self.kinesis.put_records(Information=[record], StreamName=self.stream_name)

auth=OAuthHandler(consumer_key, consumer_secret)

auth.set_access_token(access_token, access_token_secret)

stream=Stream(auth, TweetListener("twitter-stream"))

search_terms=["music", "facebook", "apple"]

stream.filter(observe=search_terms)

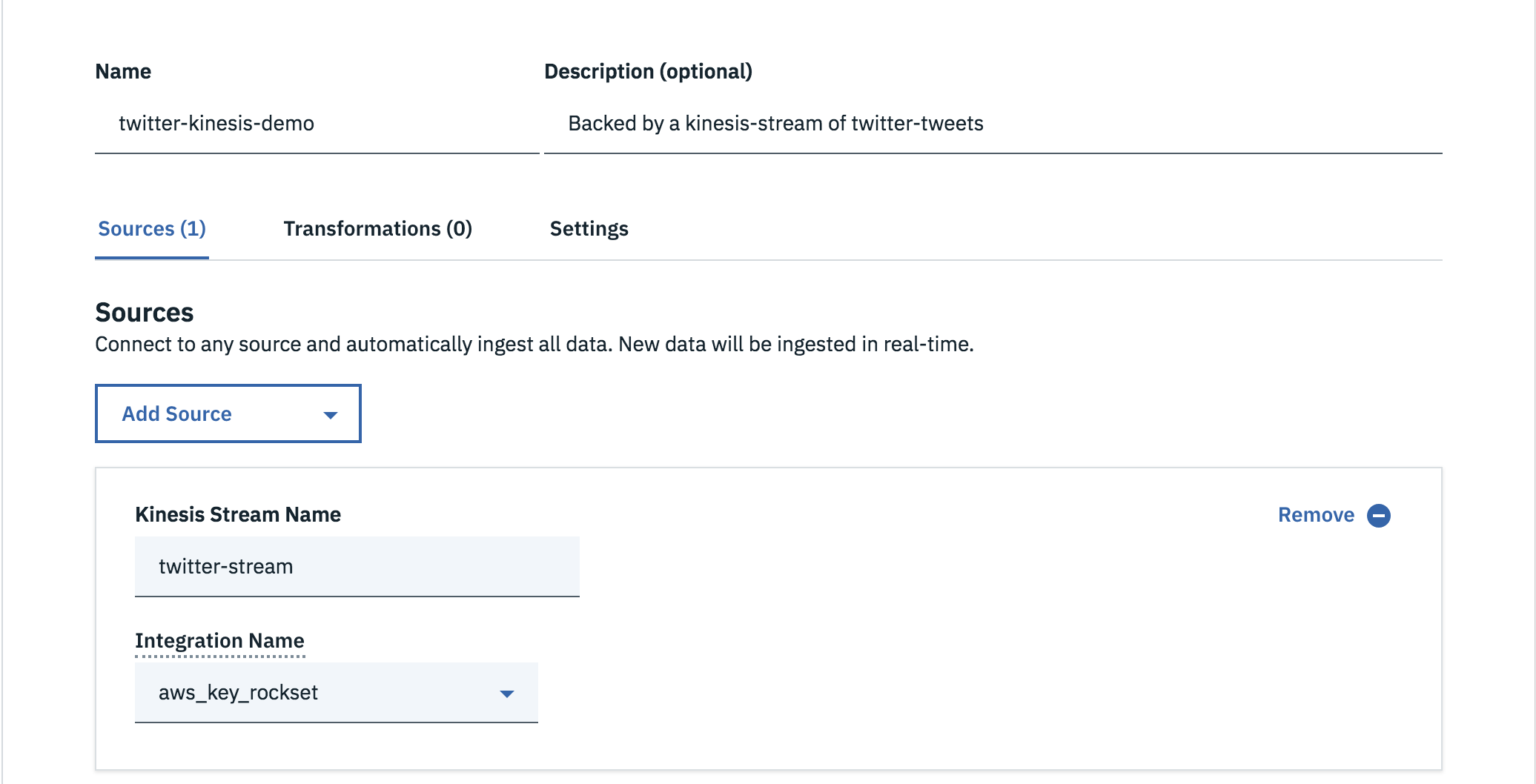

Connecting Kinesis to Rockset

The next snippet exhibits tips on how to create a group in Rockset, backed by a Kinesis stream. Observe: You must create an Integration (an object that represents your AWS credentials) and arrange related permissions on the Kinesis stream, which permits Rockset to carry out sure learn operations on that stream.

from rockset import Shopper, Q, F

rs=Shopper(api_key=...)

aws_integration=rs.Integration.retrieve(...)

sources=[

rs.Source.kinesis(

stream_name="twitter-stream",

integration=aws_integration)]

twitter_kinesis_demo=rs.Assortment.create("twitter-kinesis-demo", sources=sources)

Alternatively, collections may also be created from the Rockset console, as proven beneath.

Constructing the Dwell Dashboard

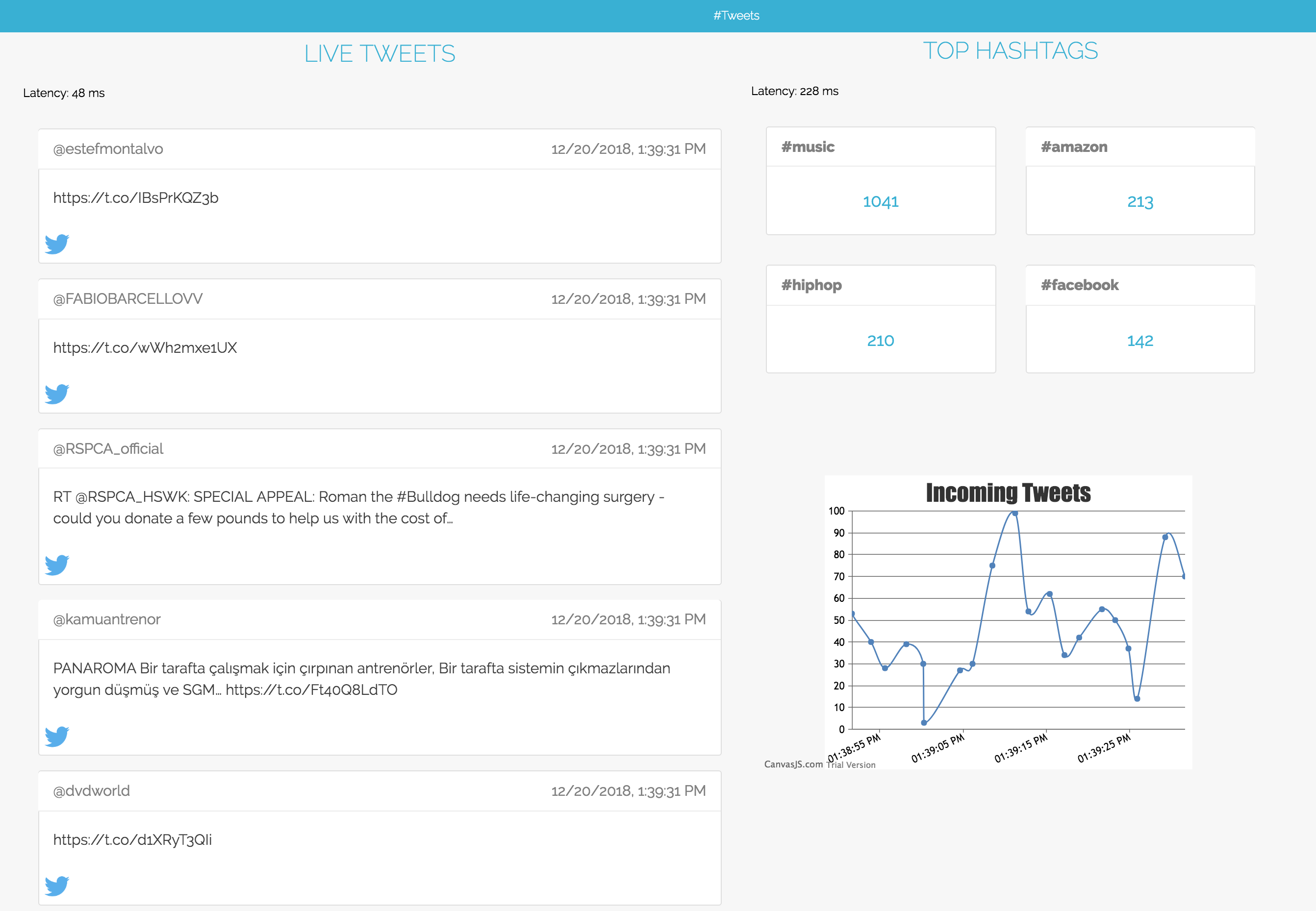

Now that I’ve a producer writing tweets to a Kinesis stream and a group to ingest them into Rockset, I can construct a dashboard on high of this assortment. My dashboard has two views.

Tweets View

The primary view shows analytics on all of the tweets coming into Rockset and has 3 panels, every of which makes its personal question to Rockset.

Dwell Tweets

The Dwell Tweets panel continually refreshes to indicate the newest tweets showing within the assortment. A question is made at a hard and fast refresh interval to fetch tweets that have been tweeted within the final minute. Right here, I’m choosing required fields to indicate on the feed and filtering out tweets older than a minute.

SELECT t.timestamp_ms,

t.created_at AS created_at,

t.textual content AS textual content,

t.consumer.screen_name AS screen_name

FROM "twitter-kinesis-demo" t

WHERE CAST(timestamp_ms AS INT) > UNIX_MILLIS(current_timestamp() - minutes(1))

ORDER BY timestamp_ms DESC

LIMIT 100;

Prime Hashtags

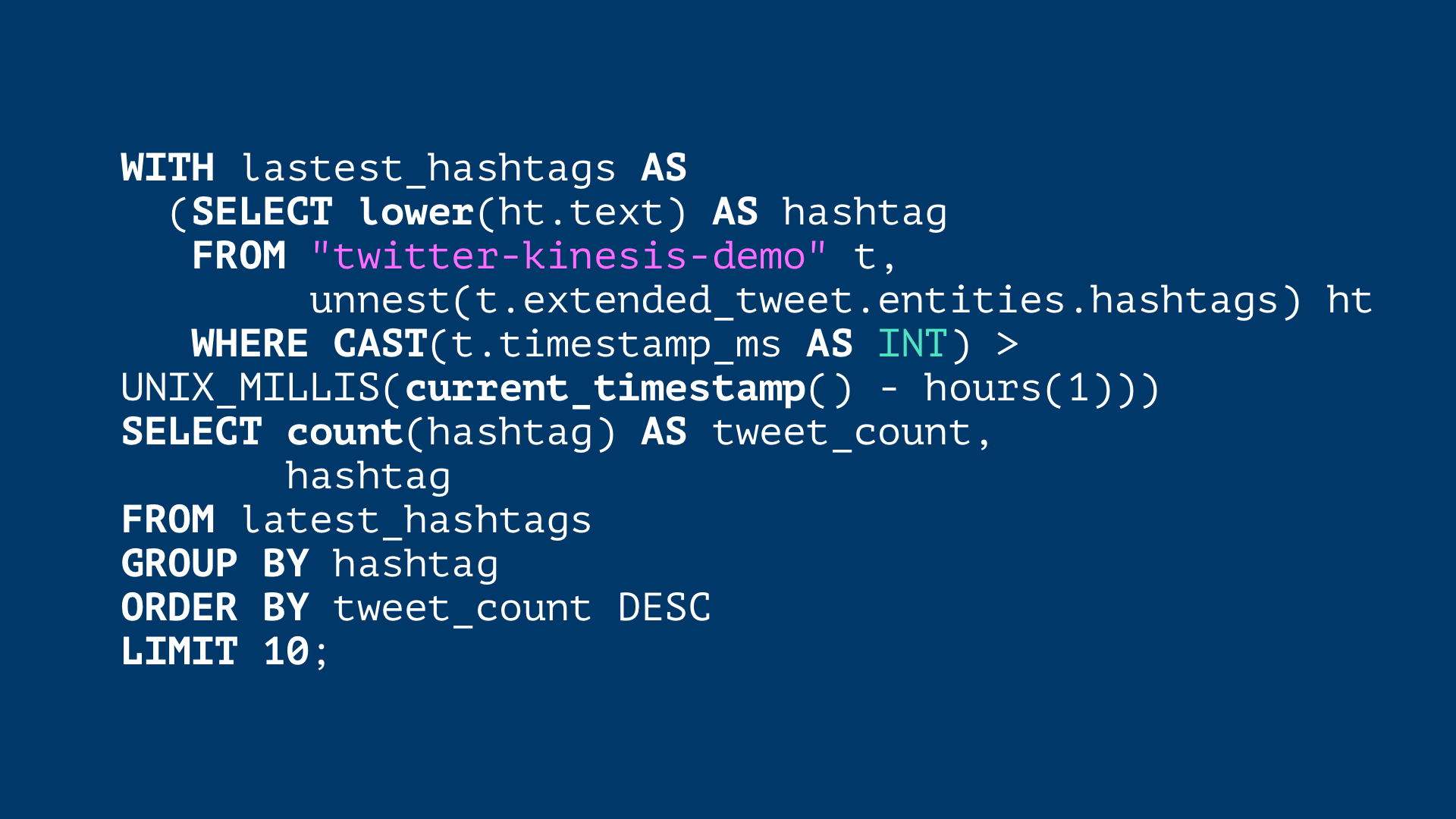

The Prime Hashtags panel exhibits trending hashtags, which have been present in most variety of tweets within the final hour, together with the related tweet depend. On this question, all hashtags showing within the final one hour are filtered into a short lived relation latest_hashtags. Utilizing a WITH clause, latest_hashtags is used it the principle question, the place we group by all of the hashtags and order by tweet_count to acquire the trending hashtags.

WITH lastest_hashtags AS

(SELECT decrease(ht.textual content) AS hashtag

FROM "twitter-kinesis-demo" t,

unnest(t.extended_tweet.entities.hashtags) ht

WHERE CAST(t.timestamp_ms AS INT) > UNIX_MILLIS(current_timestamp() - hours(1)))

SELECT depend(hashtag) AS tweet_count,

hashtag

FROM latest_hashtags

GROUP BY hashtag

ORDER BY tweet_count DESC

LIMIT 10;

Incoming Tweets

The final panel is a chart which exhibits the speed at which customers are tweeting. We get hold of knowledge factors for the variety of incoming tweets each 2 seconds and plot them in a chart.

SELECT depend(*)

FROM "twitter-kinesis-demo"

WHERE forged(timestamp_ms AS INT) > unix_millis(current_timestamp() - seconds(2));

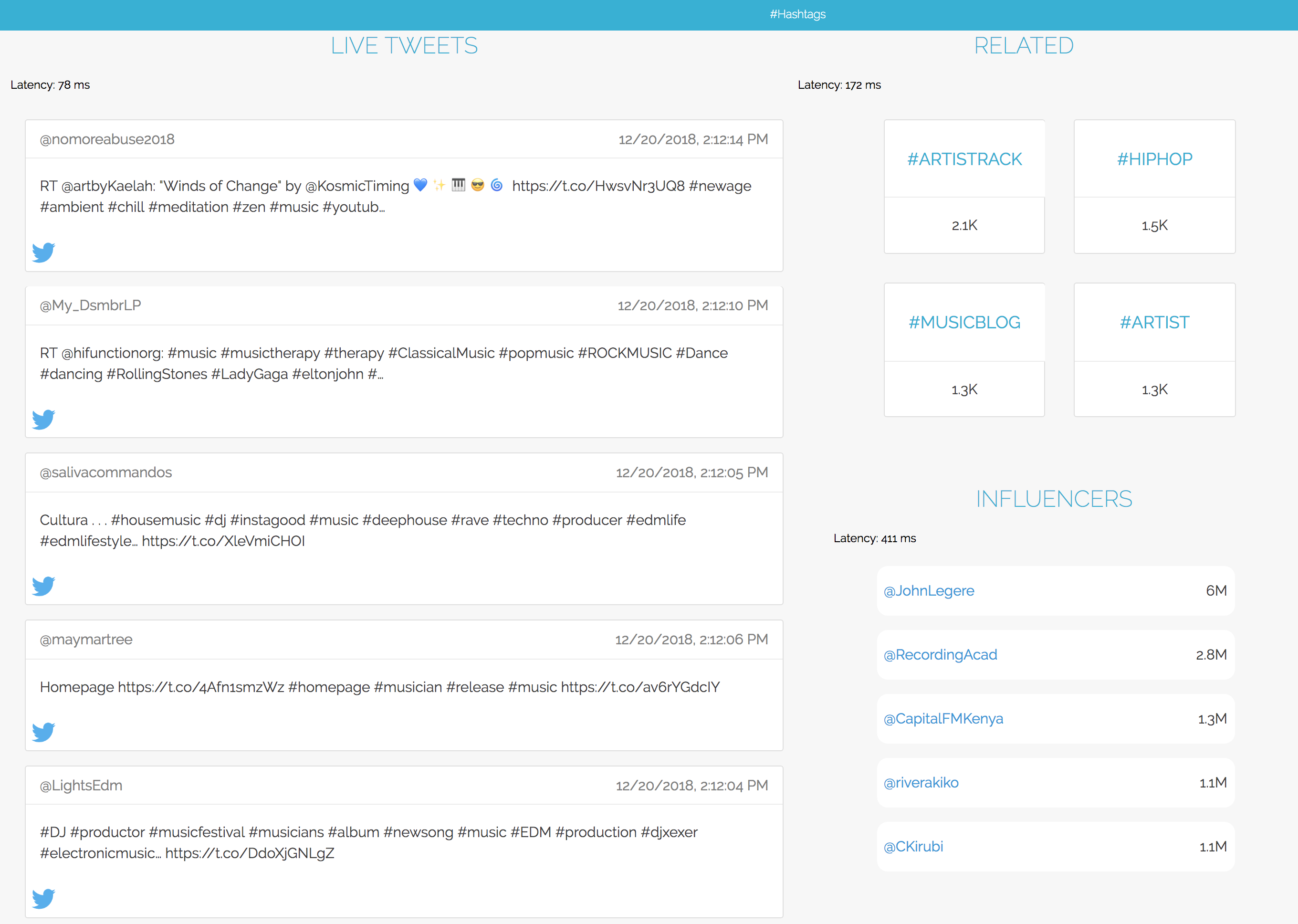

Hashtags View

The second view shows analytics on tweets with a particular hashtag and likewise has 3 panels: Dwell Tweets, Associated Hashtags, and Influencers. Every panel within the dashboard makes a question to Rockset. That is similar to the primary dashboard view however narrows the analytics to a specific hashtag of curiosity.

Influencers

As we have now narrowed our evaluation to a single hashtag, it could be attention-grabbing to see who probably the most influential customers are round this matter. For this, we outline influencers as customers with the very best variety of followers who’re tweeting the hashtag of curiosity.

SELECT t.consumer.screen_name,

t.consumer.followers_count AS fc

FROM "twitter-kinesis-demo" t

WHERE 'music' IN

(SELECT hashtags.textual content

FROM unnest(t.entities.hashtags) hashtags)

GROUP BY (t.consumer.screen_name,

t.consumer.followers_count)

ORDER BY t.consumer.followers_count DESC

LIMIT 5;

Associated Hashtags

This part is considerably much like the Prime Hashtags panel we noticed within the Tweets view of the dashboard. It exhibits the hashtags that co-occur most frequently together with our hashtag of curiosity.

SELECT hashtags.textual content as hashtag,

depend(*) AS occurence_count

FROM "twitter-kinesis-demo" t,

unnest(t.entites.hashtags) hashtags

WHERE 'music' IN

(SELECT ht.textual content

FROM unnest(t.entities.hashtags) ht)

AND hashtags.textual content != 'music'

GROUP BY hashtags.textual content

ORDER BY occurence_count DESC

LIMIT 10;

Dwell Tweets

The Dwell Tweets panel is similar to one we noticed within the Tweets view of the dashboard. The one distinction is a brand new filter is utilized so as to choose these tweets which include our hashtag of curiosity. I already used this filter for the opposite two panels within the Hashtags view.

The place to Go from Right here

Whereas I created this instance stay dashboard for example how real-time analytics may very well be carried out on knowledge from Kinesis streams, Rockset helps Kafka, as a streaming supply, and customary visualization instruments, like Tableau, Apache Superset, Redash, and Grafana, as nicely.

You may discuss with the total supply code for this instance right here, in case you are fascinated with constructing on streaming knowledge utilizing Rockset and Kinesis. Comfortable constructing!