Product recommendations are a core feature of the modern customer experience. When users return to a site with which they’ve previously interacted, they expect to be greeted by recommendations related to those prior interactions that help them pick up where they left off. When users engage with a specific item, they expect similar, relevant alternatives to be suggested to help them find just the right item to meet their needs. And as items are placed in a cart, users expect additional products to be suggested that complete and enhance their overall shopping experience. When done right, these product recommendations not only facilitate the shopping journey but leave the customer feeling recognized and understood by the retailer.

While there are many different approaches to generating product recommendations, most recommendation engines in use today rely on historical patterns of interaction between products and customers, learned by applying sophisticated techniques to large collections of retailer-specific data. These engines are surprisingly robust at reinforcing patterns learned from successful customer engagements, but sometimes we need to break from these historical patterns in order to deliver a different experience.

Consider the scenario where a new product has been introduced for which there are only a limited number of interactions in our data. Recommenders that require knowledge learned from numerous customer engagements may fail to suggest the product until sufficient data has built up to support a recommendation.

Or consider another scenario where a single product draws an inordinate amount of attention. In this scenario, the recommender runs the risk of falling into the trap of always suggesting this one item because of its overwhelming popularity, to the detriment of other viable products in the portfolio.

To avoid these and other similar challenges, retailers might incorporate a tactic that employs widely recognized patterns of product association based on common knowledge. Much like a helpful sales associate, this kind of recommender could examine the items a customer seems to be interested in and suggest additional items that align with the path or paths these product combinations may indicate.

Using a Large Language Model to Make Recommendations

Consider the scenario where a customer shops for winter scarves, beanies and mittens. Clearly, this customer is gearing up for a cold-weather outing. Let’s say the retailer has recently introduced heavy wool socks and winter boots into its product portfolio. Where other recommenders might not yet pick up on the association between these items and those the customer is browsing, because of a lack of interactions in the historical data, common knowledge links these items together.

This kind of knowledge is often captured by large language models (LLMs) trained on large volumes of general text. In that text, mittens and boots might be directly linked by people putting on both items before venturing outdoors, and associated with concepts like “cold”, “snow” and “winter” that strengthen the relationship and draw in other related items.

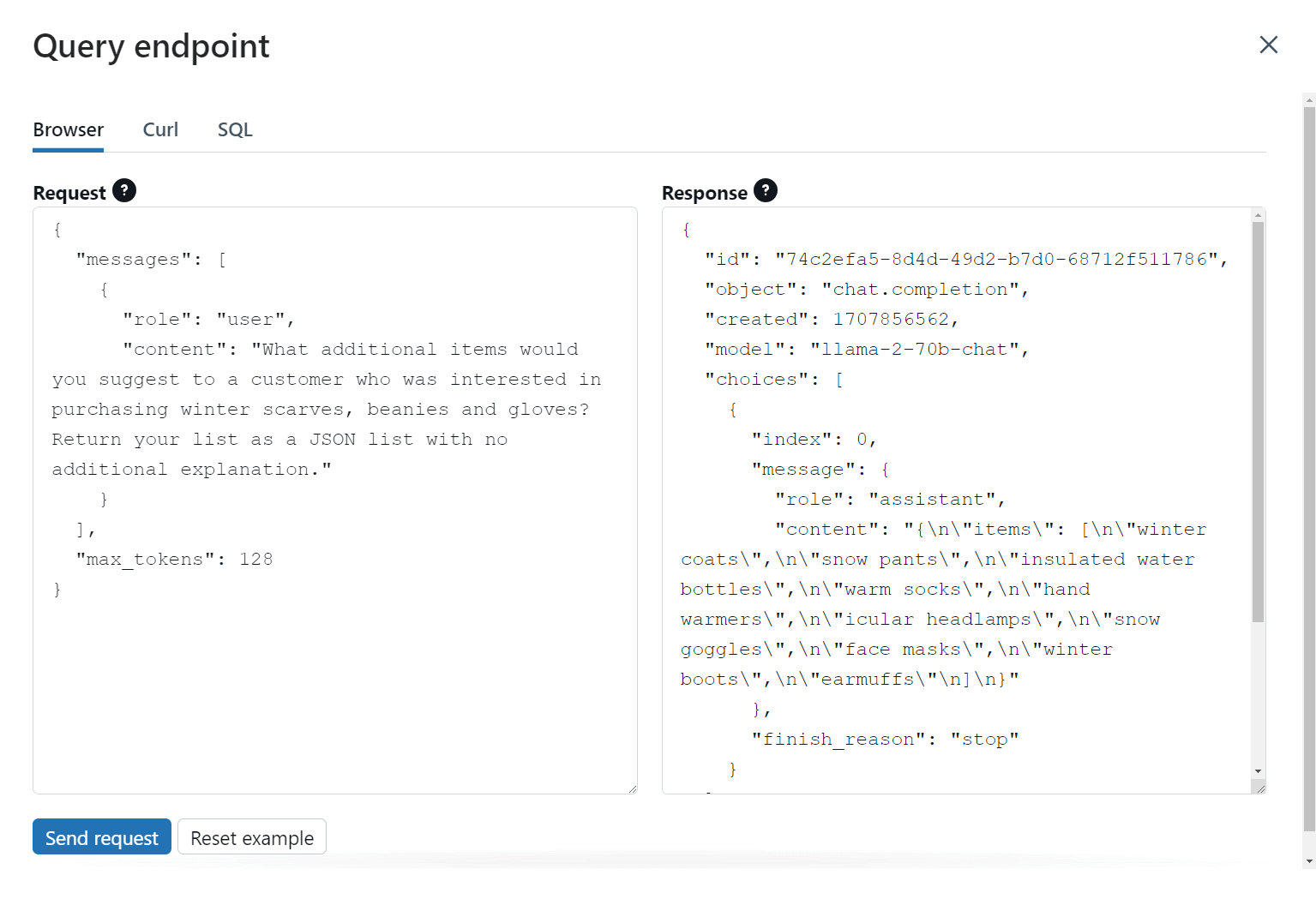

When the LLM is then asked what other items might be associated with a scarf, beanie and mittens, all of this knowledge, captured in billions of internal parameters, is used to suggest a prioritized list of additional items that are likely to be of interest. (Figure 1)
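To make the request-and-response step concrete, here is a minimal sketch of how a prompt might be built from the cart and how a prioritized, numbered-list reply could be parsed back into item names. The prompt wording, the function names `build_prompt` and `parse_ranked_list`, and the numbered-list response format are all illustrative assumptions, not the solution accelerator’s actual prompt; the LLM reply is a hard-coded stand-in rather than real model output.

```python
import re

# Hypothetical prompt template; the wording is an illustrative assumption.
PROMPT_TEMPLATE = (
    "A customer is shopping for the following items: {items}.\n"
    "Suggest up to {n} other products they might be interested in, "
    "most relevant first. Respond with a numbered list, one product per line."
)


def build_prompt(cart_items: list[str], n: int = 5) -> str:
    """Fill the template with the items the customer has shown interest in."""
    return PROMPT_TEMPLATE.format(items=", ".join(cart_items), n=n)


def parse_ranked_list(response: str) -> list[str]:
    """Extract items, in order, from lines such as '1. Wool socks' or '2) Boots'."""
    items = []
    for line in response.splitlines():
        match = re.match(r"\s*\d+[.)]\s*(.+)", line)
        if match:
            items.append(match.group(1).strip())
    return items


prompt = build_prompt(["scarf", "beanie", "mittens"])
# Stand-in for a real LLM reply; in practice this text comes back from the model.
reply = "1. Winter boots\n2. Wool socks\n3. Insulated jacket"
print(parse_ranked_list(reply))  # ['Winter boots', 'Wool socks', 'Insulated jacket']
```

Asking for a numbered list keeps the model’s ranking explicit, so the parsed order can be treated as the priority order; real replies can deviate from the requested format, so a production parser would need to be more forgiving.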

The beauty of this approach is that we aren’t limited to asking the LLM to consider just the items in the cart in isolation. We might recognize that a customer shopping for these winter items in south Texas may have a different set of preferences than a customer buying the same items in northern Minnesota, and incorporate that geographic information into the LLM’s prompt. We might also incorporate information about promotional campaigns or events to encourage the LLM to suggest items associated with those efforts. Again, much like a store associate, the LLM can balance a variety of inputs to arrive at a meaningful, relevant set of recommendations.
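One way to fold this extra context into the request is to append optional sentences to the prompt only when the information is available. The sketch below assumes a hypothetical `build_contextual_prompt` helper with `region` and `promotions` parameters; the names and phrasing are illustrative, not a prescribed prompt format.

```python
from typing import Optional


def build_contextual_prompt(
    cart_items: list[str],
    region: Optional[str] = None,
    promotions: Optional[list[str]] = None,
    n: int = 5,
) -> str:
    """Assemble an LLM prompt from the cart plus optional shopper context.

    Parameter names and wording are illustrative assumptions.
    """
    lines = [f"A customer is shopping for: {', '.join(cart_items)}."]
    if region:
        # Geography can shift what is relevant (e.g., south Texas vs. northern Minnesota).
        lines.append(
            f"The customer is located in {region}; tailor suggestions to that region and climate."
        )
    if promotions:
        # Nudge the model toward items tied to current campaigns or events.
        lines.append(
            "Where relevant, favor items related to these promotions: "
            + "; ".join(promotions) + "."
        )
    lines.append(f"Suggest up to {n} additional products, most relevant first, as a numbered list.")
    return "\n".join(lines)


print(build_contextual_prompt(
    ["scarf", "beanie", "mittens"],
    region="northern Minnesota",
    promotions=["winter boot clearance"],
))
```

Keeping each piece of context as its own optional line means the same function serves anonymous shoppers (cart only) and shoppers with known location or active campaigns.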

Connecting the Recommendations with Available Products

But how do we relate the general product suggestions provided by the LLM back to the specific items in our product catalog? LLMs trained on publicly available datasets don’t typically have knowledge of the specific items in a retailer’s product portfolio, and training such a model on retailer-specific information is both time-consuming and cost-prohibitive.

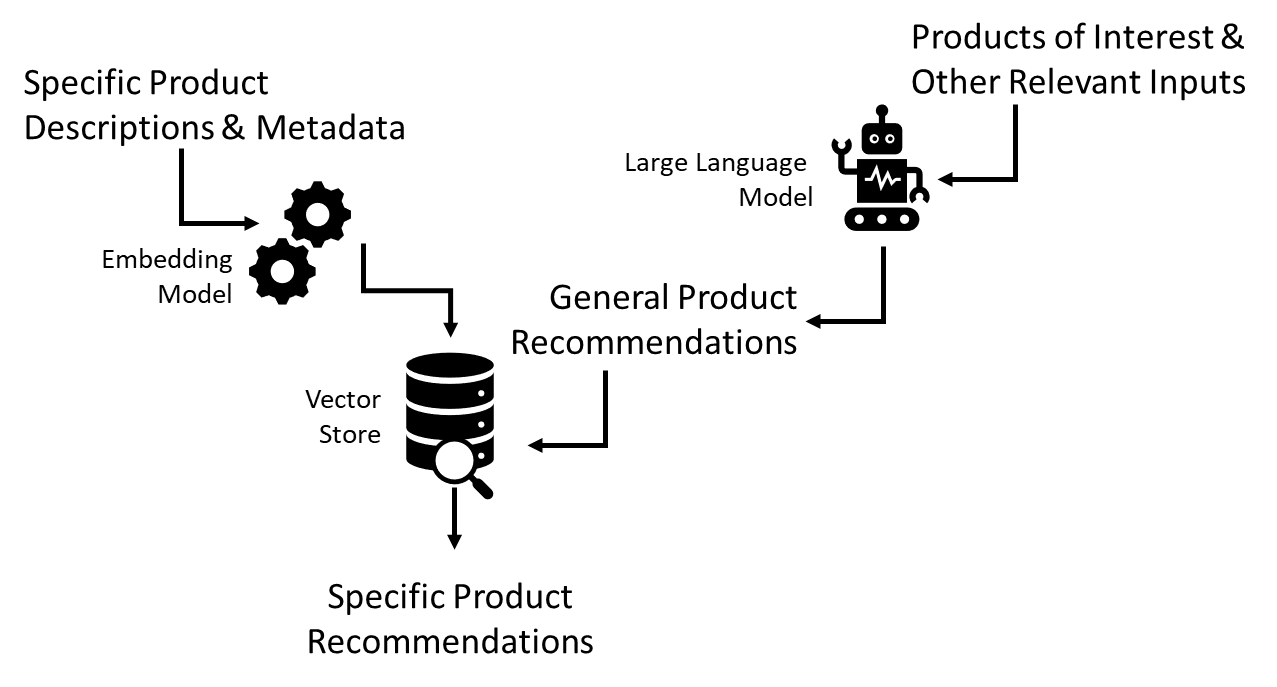

The solution to this problem is relatively simple. Using a lightweight embedding model, such as one of the many open source models freely available online, we can translate the descriptive information and other metadata for each of our products into what are known as embeddings. (Figure 2)

[ -1.41311243e-01, 4.90943342e-02, 2.61841211e-02, 6.41700476e-02, …, -3.52126663e-03 ]
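The vector above comes from a real embedding model. The sketch below shows the shape of this step under stated assumptions: a hypothetical `product_text` helper flattens a product record (the field names are assumptions) into a single string, and `toy_embed` is a deliberately simple stand-in, a hashed and normalized bag of words, so the example runs without downloading a model. It reflects shared words only, not meaning; in practice you would call a real embedding model here instead (for example, `SentenceTransformer(...).encode(...)` from the sentence-transformers library).

```python
import hashlib
import math
import re

DIM = 64  # toy size; real embedding models produce hundreds of dimensions or more


def product_text(product: dict) -> str:
    """Flatten a product record's descriptive fields into one string to embed.

    The field names are hypothetical; use whatever metadata your catalog holds.
    """
    fields = ("name", "category", "description")
    return ". ".join(str(product[f]) for f in fields if product.get(f))


def toy_embed(text: str, dim: int = DIM) -> list[float]:
    """Stand-in embedding: a hashed, L2-normalized bag of words.

    md5 is used (instead of Python's salted hash()) so results are stable
    across runs. This captures word overlap only, not semantic similarity.
    """
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.md5(token.encode()).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


boots = {
    "name": "Acme Troopers",
    "category": "winter boots",
    "description": "Waterproof canvas and leather uppers for snowy streets.",
}
vector = toy_embed(product_text(boots))
print(len(vector))  # 64
```

Whatever model is used, the same text-building step should be applied consistently to every product so their embeddings are comparable.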

The concept of an embedding gets a little technical, but in a nutshell, it’s a numerical representation of the text and how it maps to a set of recognized concepts and relationships found within a given language. Two items conceptually similar to one another, such as generic winter boots and the specific Acme Troopers, which allow a wearer to tromp through snowy city streets or along mountain paths in the comfort of waterproof canvas and leather uppers built to withstand winter’s worst, would have very similar numerical representations when passed through an appropriate embedding model. If we calculate the mathematical difference (distance) between the embeddings associated with each item, we would find relatively little separation between them, indicating that these items are closely related.
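One common way to measure that distance is cosine distance, which is 1 minus the cosine of the angle between two vectors (Euclidean distance is another option). The sketch below uses made-up three-dimensional vectors purely for illustration; real embeddings have far more dimensions and come from an embedding model.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def cosine_distance(a: list[float], b: list[float]) -> float:
    """Smaller distance means the two items are more closely related."""
    return 1.0 - cosine_similarity(a, b)


# Made-up vectors for illustration only.
winter_boots = [0.9, 0.1, 0.0]
acme_troopers = [0.8, 0.2, 0.1]
sun_hat = [0.0, 0.1, 0.9]

print(cosine_distance(winter_boots, acme_troopers) < cosine_distance(winter_boots, sun_hat))  # True
```

Here the distance between the generic boots and the Acme Troopers is about 0.016, while the distance to the sun hat is about 0.99, matching the intuition that the first pair is closely related.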

To put this concept into action, all we would need to do is convert all of our specific product descriptions and metadata into embeddings and store these in a searchable index, often referred to as a vector store. As the LLM makes general product recommendations, we would then translate each of these into embeddings of their own and search the vector store for the most closely related items, giving us specific items in our portfolio to place in front of our customer. (Figure 3)
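The following is a minimal, in-memory sketch of that flow: index each catalog item’s embedding, then look up the nearest catalog item for each general suggestion from the LLM. `ToyEmbedder` (a normalized bag of words over the catalog’s vocabulary), `InMemoryVectorStore`, and the SKU data are all hypothetical stand-ins; a real deployment would use an actual embedding model and a managed vector store, which typically relies on approximate nearest-neighbor indexes rather than the brute-force scan shown here.

```python
import math
import re
from typing import Callable

Vector = list[float]


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z]+", text.lower())


class ToyEmbedder:
    """Stand-in for a real embedding model: normalized bag of words over a fixed vocabulary."""

    def __init__(self, corpus: list[str]):
        vocab = sorted({t for text in corpus for t in tokenize(text)})
        self.index = {t: i for i, t in enumerate(vocab)}

    def __call__(self, text: str) -> Vector:
        vec = [0.0] * len(self.index)
        for t in tokenize(text):
            if t in self.index:  # words outside the catalog vocabulary are ignored
                vec[self.index[t]] += 1.0
        norm = math.sqrt(sum(v * v for v in vec))
        return [v / norm for v in vec] if norm else vec


class InMemoryVectorStore:
    """Minimal vector store: keeps (id, vector) pairs and does brute-force top-k search."""

    def __init__(self, embed: Callable[[str], Vector]):
        self.embed = embed
        self.ids: list[str] = []
        self.vectors: list[Vector] = []

    def add(self, item_id: str, text: str) -> None:
        self.ids.append(item_id)
        self.vectors.append(self.embed(text))

    def search(self, query: str, k: int = 1) -> list[tuple[str, float]]:
        q = self.embed(query)
        # Vectors are unit length (or all zeros), so the dot product equals cosine similarity.
        scores = [sum(a * b for a, b in zip(q, v)) for v in self.vectors]
        ranked = sorted(zip(self.ids, scores), key=lambda pair: pair[1], reverse=True)
        return ranked[:k]


catalog = {
    "SKU-001": "Acme Troopers winter boots with waterproof canvas and leather uppers",
    "SKU-002": "Heavy wool socks for cold weather",
    "SKU-003": "Lightweight canvas sun hat for summer",
}
store = InMemoryVectorStore(ToyEmbedder(list(catalog.values())))
for sku, text in catalog.items():
    store.add(sku, text)

# Stand-in for the general suggestions an LLM might return.
for suggestion in ["winter boots", "wool socks"]:
    print(suggestion, "->", store.search(suggestion, k=1)[0][0])
# winter boots -> SKU-001
# wool socks -> SKU-002
```

Because the store only depends on an `embed` callable, swapping the toy embedder for a real model changes nothing else in the lookup logic.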

Bringing the Solution Together with Databricks

The recommender pattern presented here can be a welcome addition to the suite of recommenders used by organizations in scenarios where general knowledge of product associations can be leveraged to make useful suggestions to customers. To get the solution off the ground, organizations must be able to access a large language model as well as a lightweight embedding model, and bring the functionality of both together with their own proprietary information. Once this is done, the organization needs the ability to turn all of these assets into a solution that can easily be integrated and scaled across the range of customer-facing interfaces where these recommendations are needed.

With the Databricks Data Intelligence Platform, organizations can address each of these challenges within a single, consistent, unified environment that makes implementation and deployment easy and cost-effective while preserving data privacy. With Databricks’ new Vector Search capability, developers can tap into an integrated vector store with surrounding workflows that keep the embeddings housed within it up to date. Through the new Foundation Model APIs, developers can tap into a wide range of open source and proprietary large language models with minimal setup. And through enhanced Model Serving capabilities, the end-to-end recommender workflow can be packaged for deployment behind an open and secure endpoint that enables integration across the widest range of modern applications.

But don’t just take our word for it. See it for yourself. In our latest solution accelerator, we have built an LLM-based product recommender that implements the pattern shown here and demonstrates how these capabilities can be brought together to go from concept to operationalized deployment. All of the code is freely available, and we invite you to explore this solution in your environment as part of our commitment to helping organizations maximize the potential of their data.

Download the notebooks