Microsoft Security Copilot, also known as Copilot for Security, will be generally available starting April 1, the company announced today. Microsoft revealed that pricing for Security Copilot will start at $4 per hour, calculated based on usage.

At a press briefing on March 7 at the Microsoft Experience Center in New York (Figure A), we saw how Microsoft positions Security Copilot as a way for security personnel to get real-time assistance with their work and pull data from across Microsoft's suite of security services.

Microsoft Security Copilot availability and pricing

Security Copilot was first announced in March 2023, and early access opened in October 2023. General availability will be worldwide, and the Security Copilot user interface is available in 25 different languages. Security Copilot can process prompts and respond in eight different languages.

Security Copilot will be sold through a consumption-based pricing model, with customers paying based on their needs. Usage will be measured in Security Compute Units (SCUs). Customers will be billed monthly for the number of SCUs provisioned hourly, at a rate of $4 per SCU per hour with a minimum of one hour of use. Microsoft frames this as a way to let users start experimenting with Security Copilot and then scale up as needed.
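As a rough illustration of the pricing arithmetic, the Python sketch below multiplies the stated $4 hourly rate by the number of SCUs provisioned and the number of hours they stay provisioned. The `Provisioning` type and `estimate_monthly_cost` function are hypothetical, and whole-hour billing with the one-hour minimum applied to each provisioning block is an assumption for illustration, not Microsoft's documented billing logic.

```python
from dataclasses import dataclass

# Rate stated in the announcement: $4 per Security Compute Unit (SCU) per hour.
SCU_HOURLY_RATE_USD = 4.0
# Stated minimum of one hour of use.
MIN_BILLABLE_HOURS = 1


@dataclass
class Provisioning:
    """Hypothetical model: a fixed number of SCUs kept provisioned for some whole hours."""
    scus: int
    hours: int


def estimate_monthly_cost(blocks: list[Provisioning]) -> float:
    """Estimate a monthly bill as rate x SCUs x hours, summed over provisioning blocks.

    Assumes whole-hour billing and applies the one-hour minimum to each block;
    both are simplifying assumptions, not Microsoft's published billing rules.
    """
    total = 0.0
    for block in blocks:
        if block.scus < 0 or block.hours < 0:
            raise ValueError("SCUs and hours must be non-negative")
        if block.scus == 0:
            continue  # nothing provisioned, nothing billed
        billable_hours = max(block.hours, MIN_BILLABLE_HOURS)
        total += SCU_HOURLY_RATE_USD * block.scus * billable_hours
    return total


if __name__ == "__main__":
    # Three SCUs kept provisioned for a 30-day month (720 hours).
    print(estimate_monthly_cost([Provisioning(scus=3, hours=720)]))  # 8640.0
```

Under these assumptions, a team that provisions a single SCU only for short experiments would pay $4 for each block, which matches Microsoft's framing of starting small and scaling up.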

How Microsoft Security Copilot assists security professionals

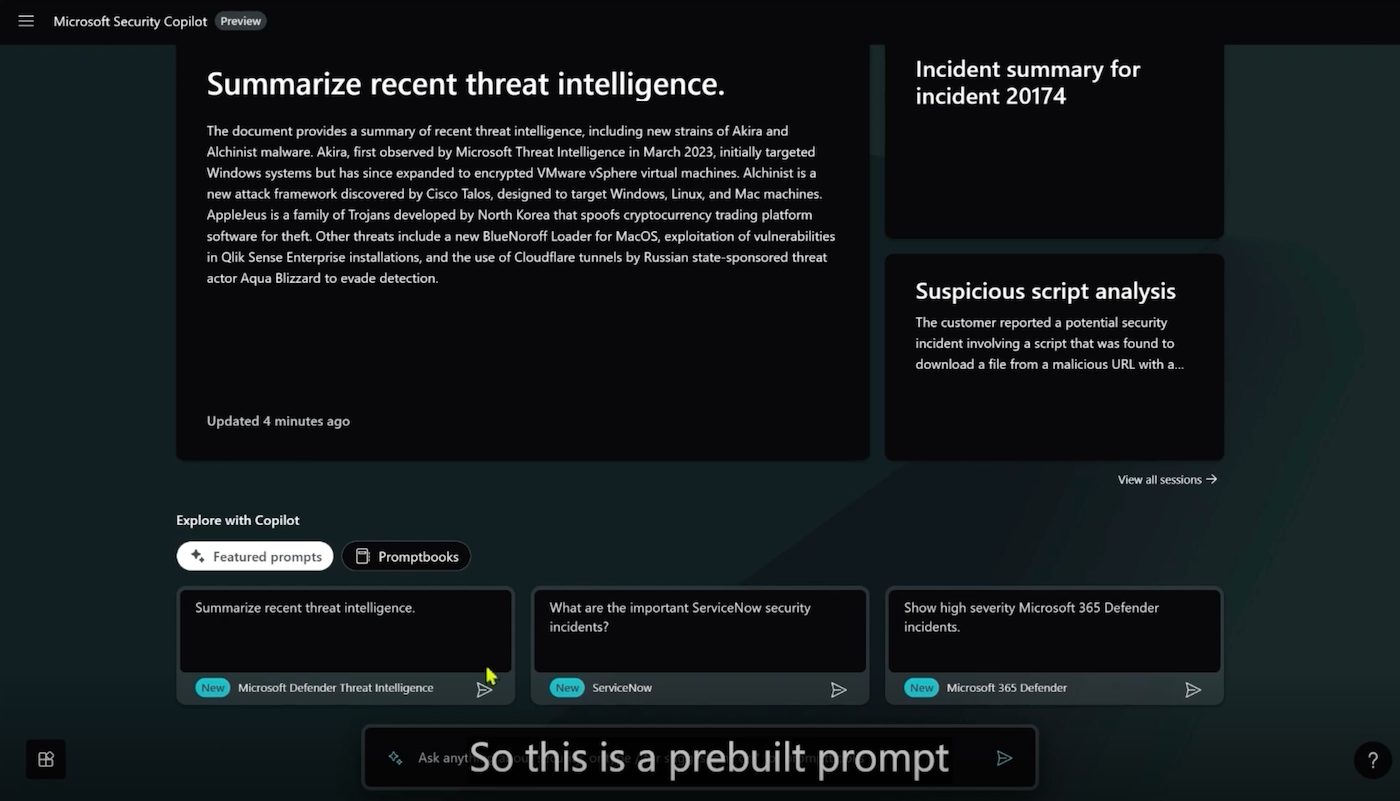

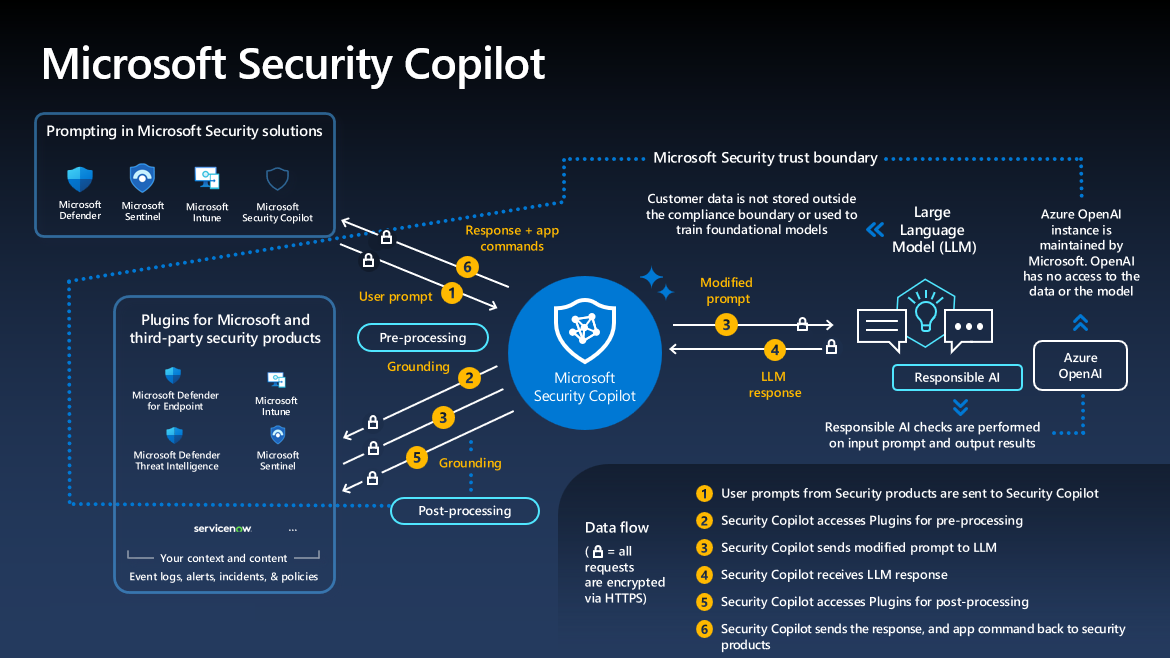

Security Copilot can work as a standalone tool drawing data from many different sources (Figure B) or as an embedded chat window within other Microsoft security services.

Security Copilot provides suggestions on what a security analyst might do next based on a conversation or incident report.

Putting AI in the hands of cybersecurity professionals helps defend against attackers who operate in a community of "ransomware as a gig economy," said Vasu Jakkal, corporate vice president of Microsoft Security.

What differentiates Microsoft Security Copilot from competitors, Jakkal said, is that it draws on ChatGPT and can use data from across vast numbers of connected Microsoft applications.

"We process 78 trillion signals, which is our new number (compared to previous data), and so all these signals are going on, what we call grounding the security. And without these signals, you can't really have a gen (generative) AI tool, because it needs to know these connections; it needs to know the path," Jakkal said.

Jakkal pointed out that Microsoft is investing $20 billion in security over five years, in addition to separate AI investments.

SEE: NIST updated its Cybersecurity Framework in February, adding a new area of focus: governance. (TechRepublic)

One benefit of Security Copilot's conversational experience is that it can write incident reports very quickly and make those reports more or less technical depending on the employee they're intended for, Microsoft representatives said.

"To me, Copilot for Security is an absolute game changer for an executive because it allows them a summary (of security incidents). A summary of the size that you want," said Sherrod DeGrippo, director of threat intelligence strategy at Microsoft.

Security Copilot's ability to tailor reports helps CISOs bridge the technical and executive worlds, said DeGrippo.

"My hot take is that CISOs are a different breed of C-suite person," said DeGrippo. "They want depth. They want to get technical. They want to have their hands in there. And they want to also have the ability to move through those executive circles as the expert. They want to be their own expert when they talk to the board, when they talk to their CEO, whoever it may be, their CFO."

Learnings from the Security Copilot private preview and early access

Naadia Sayed, principal product manager for Copilot for Security, said that during the private preview and early access periods, partners told Microsoft which APIs they wanted to connect to Security Copilot. Customers with custom APIs found it especially useful that Security Copilot could connect to those APIs. During the preview periods, partners were able to tailor Security Copilot to their organization's specific workflows, prompts and scenarios.

The private preview started with using the Copilot generative AI assistant for security operations tasks, Jakkal told TechRepublic. From there, customers asked for Copilot integration with other skills, such as identity-related tasks.

"We're also seeing where they want to use our security tools for governance of AI as well," Jakkal said.

For example, customers wanted to make sure that another tool such as ChatGPT wasn't sharing nonpublic company information such as salaries (Figure C).

"Something that we're finding is that people have more and more of an appetite for threat intelligence to help direct their resource utilization," said DeGrippo. "We're seeing customers make resource decisions, such as: understanding threat priority allows them to say we need to put more people and focus and time in these particular areas. And getting that level of resource utilization, prioritization and efficiency has made customers really happy. And so we're making sure that they have those tools."