Businesses often need to aggregate topics because it is essential for organizing, simplifying, and optimizing the processing of streaming data. It enables efficient analysis, facilitates modular development, and enhances the overall effectiveness of streaming applications. For example, if there are separate clusters, and there are topics with the same purpose in the different clusters, then it is useful to aggregate the content into one topic.

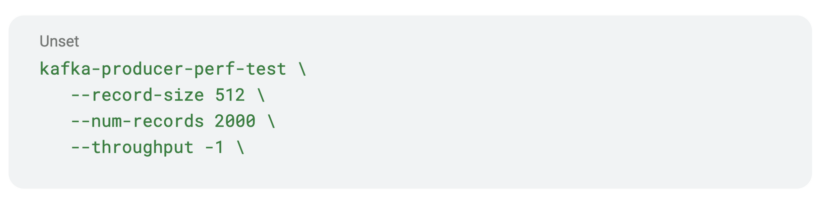

This blog post walks you through how you can use prefixless replication with Streams Replication Manager (SRM) to aggregate Kafka topics from multiple sources. To be specific, we will be diving deep into a prefixless replication scenario that involves the aggregation of two topics from two separate Kafka clusters into a third cluster.

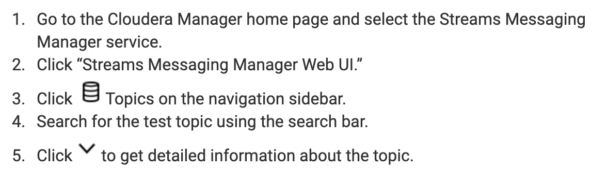

This tutorial demonstrates how to set up the SRM service for prefixless replication, how to create and replicate topics with Kafka and SRM command line (CLI) tools, and how to verify your setup using Streams Messaging Manager (SMM). Security setup and other advanced configurations are not discussed.

Before you begin

The following tutorial assumes that you are familiar with SRM concepts like replications and replication flows, replication policies, the basic service architecture of SRM, as well as prefixless replication. If not, you can check out this related blog post. Alternatively, you can read about these concepts in our SRM Overview.

Scenario overview

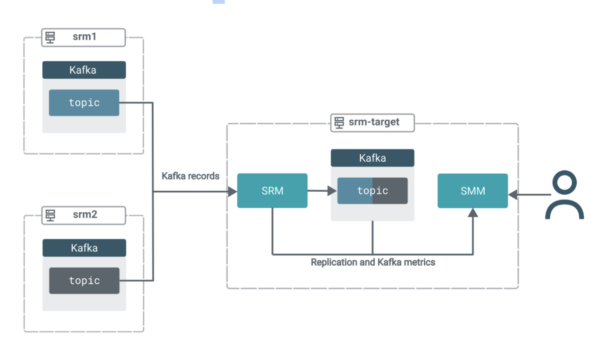

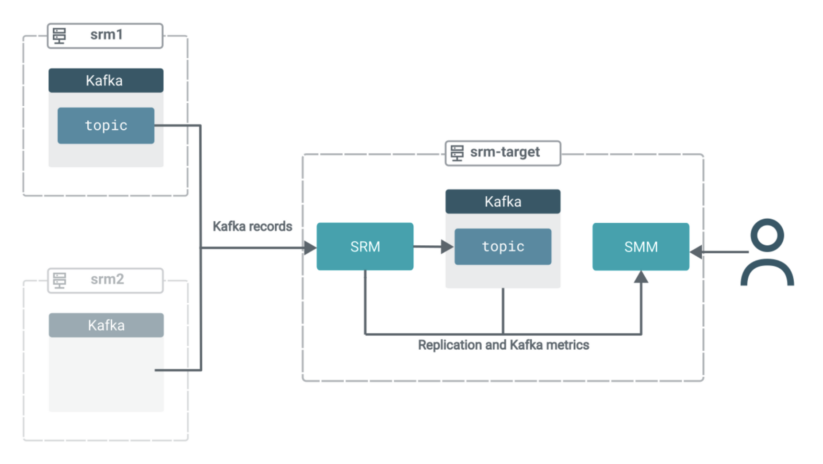

In this scenario you have three clusters. All clusters contain Kafka. Additionally, the target cluster (srm-target) has SRM and SMM deployed on it.

The SRM service on srm-target is used to pull Kafka data from the other two clusters. That is, this replication setup will be running in pull mode, which is the Cloudera-recommended architecture for SRM deployments.

In pull mode, the SRM service (specifically the SRM driver role instances) replicates data by pulling from their sources. So rather than having SRM on source clusters pushing the data to target clusters, you use SRM located on the target cluster to pull the data into its co-located Kafka cluster. Pull mode is recommended as it is the deployment type that was found to provide the highest amount of resilience against various timeout and network instability issues. You can find a more in-depth explanation of pull mode in the official docs.

The data from both source topics will be aggregated into a single topic on the target cluster. All the while, you will be able to use SMM’s powerful UI features to monitor and verify what is happening.

Set up SRM

First, you need to set up the SRM service located on the target cluster.

SRM needs to know which Kafka clusters (or Kafka services) are targets and which ones are sources, where they are located, how it can connect and communicate with them, and how it should replicate the data. This is configured in Cloudera Manager and is a two-part process. First, you define Kafka credentials, then you configure the SRM service.

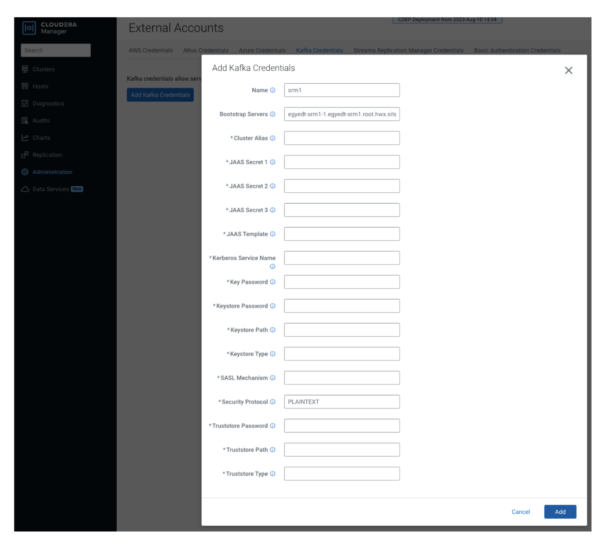

Define Kafka credentials

You define your source (external) clusters using Kafka Credentials. A Kafka Credential is an item that contains the properties required by SRM to establish a connection with a cluster. You can think of a Kafka credential as the definition of a single cluster. It contains the name (alias), address (bootstrap servers), and credentials that SRM can use to access a specific cluster.

1. In Cloudera Manager, go to the Administration > External Accounts > Kafka Credentials page.

2. Click “Add Kafka Credentials.”

3. Configure the credential.

The setup in this tutorial is minimal and unsecure, so you only need to configure the Name, Bootstrap Servers, and Security Protocol lines. The security protocol in this case is PLAINTEXT.

4. Click “Add” when you are done, and repeat the previous steps for the other cluster (srm2).

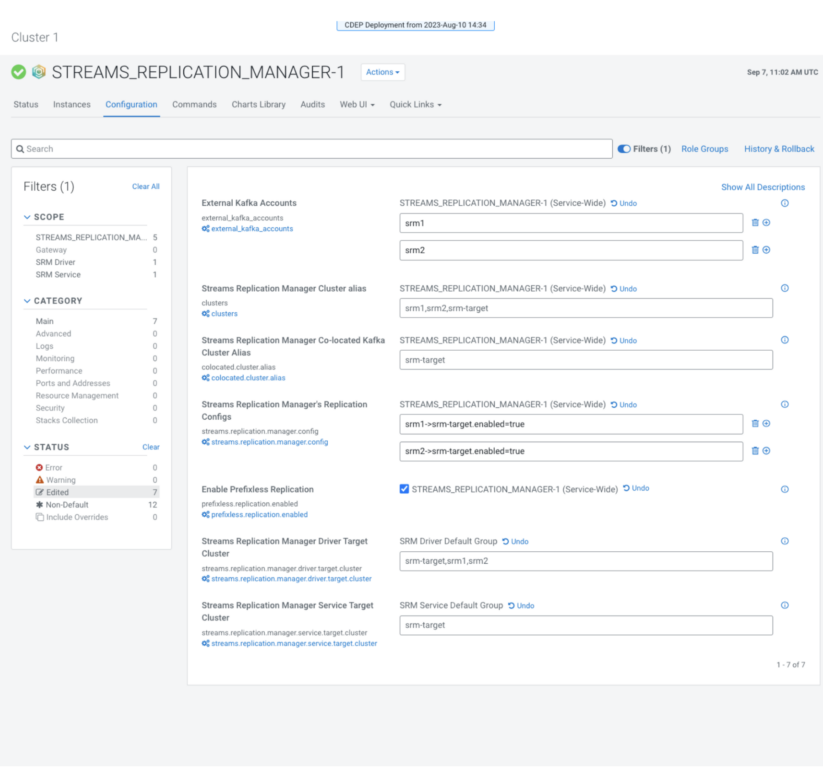
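To make the idea of "one credential equals one cluster definition" concrete, here is a minimal Python sketch of the three fields configured above. It is only a model for illustration, not how Cloudera Manager stores credentials. The `KafkaCredential` class, the host names, and port 9092 are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KafkaCredential:
    """The three fields this tutorial fills in for each source cluster."""
    name: str                             # alias SRM uses to refer to the cluster
    bootstrap_servers: str                # comma-separated host:port pairs
    security_protocol: str = "PLAINTEXT"  # unsecured, as in this tutorial


# Hypothetical broker addresses; replace them with your own source cluster hosts.
credentials = [
    KafkaCredential("srm1", "srm1-broker-1.example.com:9092"),
    KafkaCredential("srm2", "srm2-broker-1.example.com:9092"),
]

for cred in credentials:
    print(f"{cred.name}: {cred.bootstrap_servers} ({cred.security_protocol})")
```

Each entry corresponds to one pass through the "Add Kafka Credentials" dialog, once for srm1 and once for srm2.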

Configure the SRM service

After the credentials are set up, you need to configure various SRM service properties. These properties specify the target (co-located) cluster, tell SRM what replications should be enabled, and that replication should happen in prefixless mode. All of this is done on the configuration page of the SRM service.

1. From the Cloudera Manager home page, select the “Streams Replication Manager” service.

2. Go to “Configuration.”

3. Specify the co-located cluster alias with “Streams Replication Manager Co-located Kafka Cluster Alias.”

The co-located cluster alias is the alias (short name) of the Kafka cluster that SRM is deployed together with. All clusters in an SRM deployment have aliases. You use the aliases to refer to clusters when configuring properties and when running the srm-control tool. Set this to:

Notice that you only need to specify the alias of the co-located Kafka cluster. Entering connection information like you did for the external clusters is not needed. This is because Cloudera Manager passes this information automatically to SRM.

4. Specify External Kafka Accounts.

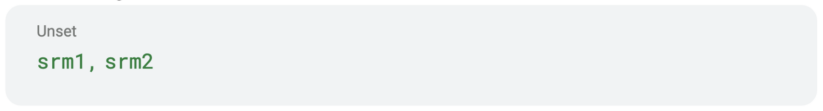

This property must contain the names of the Kafka credentials that you created in a previous step. This tells SRM which Kafka credentials it should import to its configuration. Set this to:

5. Specify all cluster aliases with “Streams Replication Manager Cluster alias.”

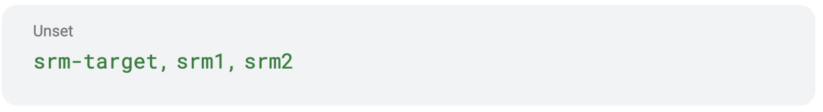

The property contains a comma-delimited list of all cluster aliases. That is, all aliases you previously added to the Streams Replication Manager Co-located Kafka Cluster Alias and External Kafka Accounts properties. Set this to:

6. Specify the driver role target with Streams Replication Manager Driver Target Cluster.

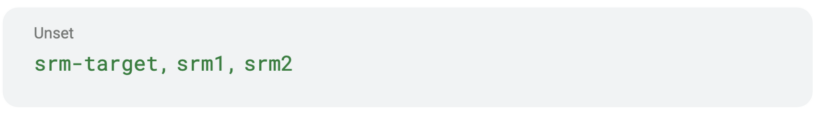

The property contains a comma-delimited list of all cluster aliases. That is, all aliases you previously added to the Streams Replication Manager Co-located Kafka Cluster Alias and External Kafka Accounts properties. Set this to:

7. Specify service role targets with Streams Replication Manager Service Target Cluster.

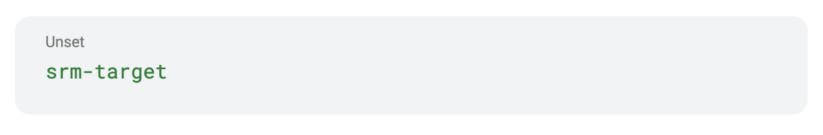

This property specifies the cluster that the SRM service role will gather replication metrics from (that is, monitor). In pull mode, the service roles must always target their co-located cluster. Set this to:

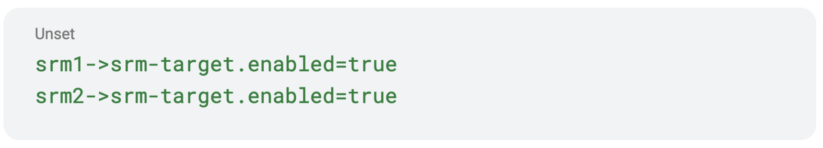

8. Specify replications with Streams Replication Manager’s Replication Configs.

This property is a jack-of-all-trades and is used to set many SRM properties that are not directly available in Cloudera Manager. But most importantly, it is used to specify your replications. Remove the default value and add the following:

9. Select “Enable Prefixless Replication.”

This property enables prefixless replication and tells SRM to use the IdentityReplicationPolicy, which is the ReplicationPolicy that replicates without prefixes.

10. Review your configuration. It should look like this:

11. Click “Save Changes” and restart SRM.
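As a rough summary of the steps above, the following Python sketch derives the property values from the three aliases used in this tutorial (srm1, srm2, srm-target). Treat it as an illustration under stated assumptions rather than the exact contents of your configuration page: the `srm_settings` helper is hypothetical, the dictionary keys simply reuse the display names from the steps, and the replication entries are assumed to follow the `source->target.enabled=true` form. Step 6 (the driver target) is not modeled here.

```python
SOURCES = ["srm1", "srm2"]  # names of the Kafka credentials (external clusters)
TARGET = "srm-target"       # alias of the co-located Kafka cluster


def srm_settings(sources, target):
    """Derive the property values described in the configuration steps."""
    all_aliases = sources + [target]
    return {
        "Streams Replication Manager Co-located Kafka Cluster Alias": target,
        "External Kafka Accounts": ",".join(sources),
        "Streams Replication Manager Cluster alias": ",".join(all_aliases),
        # In pull mode the service role monitors its co-located cluster.
        "Streams Replication Manager Service Target Cluster": target,
        # One replication per source, written in the "source->target" notation
        # (assumed syntax; verify against the SRM documentation).
        "Streams Replication Manager's Replication Configs": [
            f"{src}->{target}.enabled=true" for src in sources
        ],
    }


settings = srm_settings(SOURCES, TARGET)
for prop, value in settings.items():
    print(f"{prop}: {value}")
```

Deriving every value from the same alias list makes it harder to end up with a credential name that does not match the alias used in a replication.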

Create a topic, produce some data

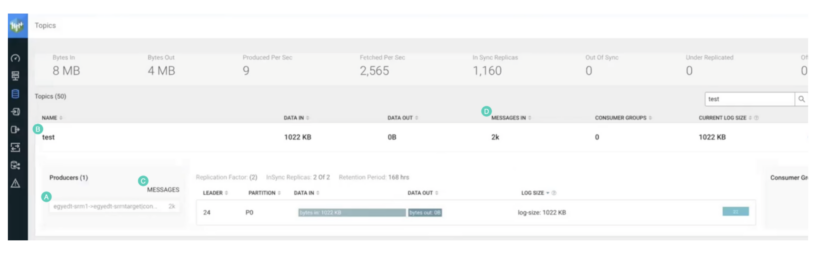

Now that SRM setup is complete, you need to create one of your source topics and produce some data. This can be done using the kafka-producer-perf-test CLI tool.

This tool creates the topic and produces the data in one go. The tool is available by default on all CDP clusters, and can be called directly by typing its name. There is no need to specify full paths.

- Using SSH, log in to one of your source cluster hosts.

- Create a topic and produce some data.

Notice that the tool will produce 2000 records. This will be important later on when we verify replication on the SMM UI.
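For reference, here is a small Python sketch that assembles a kafka-producer-perf-test invocation of the kind described above and prints it, without running anything. The options used (`--producer-props`, `--topic`, `--num-records`, `--record-size`, `--throughput`) are standard options of the tool. The topic name `test`, the 1000-byte record size, and the broker address are assumptions for this example; only the 2000-record count comes from the tutorial.

```python
import shlex


def perf_test_command(bootstrap_servers, topic="test", num_records=2000,
                      record_size=1000, throughput=-1):
    """Build the kafka-producer-perf-test argument list (does not run it)."""
    return [
        "kafka-producer-perf-test",
        "--producer-props", f"bootstrap.servers={bootstrap_servers}",
        "--topic", topic,
        "--num-records", str(num_records),
        "--record-size", str(record_size),  # bytes per record (assumed value)
        "--throughput", str(throughput),    # -1 disables throttling
    ]


# Hypothetical broker address of the first source cluster.
cmd = perf_test_command("srm1-broker-1.example.com:9092")
print(shlex.join(cmd))
```

Calling `perf_test_command` with a different bootstrap address yields an otherwise identical command, which is handy when the same topic has to be created on more than one cluster.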

Replicate the topic

So, you have SRM set up, and your topic is ready. Let’s replicate.

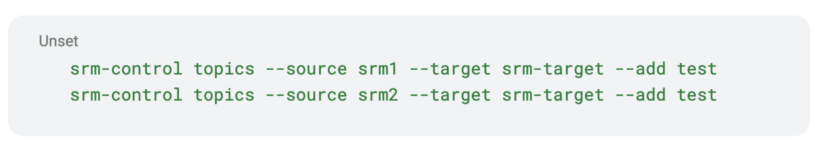

Although your replications are set up and SRM and the source clusters are connected, data is not flowing yet because the replication is inactive. To activate replication, you need to use the srm-control CLI tool to specify which topics should be replicated.

Using the tool you can manipulate the replication allow and deny lists (or topic filters), which control what topics are replicated. By default, no topic is replicated, but you can change this with a few simple commands.

- Using SSH, log in to the target cluster (srm-target).

- Run the following commands to start replication.

Notice that even though the topic on srm2 does not exist yet, we added the topic to the replication allow list as well. The topic will be created later. In this case, we are activating its replication ahead of time.
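The activation step can be sketched as follows. This Python snippet only builds and prints the srm-control calls, one per source cluster. It assumes the `topics` subcommand with the `--source`, `--target`, and `--add` options, and a topic named `test`; please verify the exact syntax against the srm-control documentation for your version. The `allow_topic_command` helper is hypothetical.

```python
import shlex


def allow_topic_command(source, target, topic):
    """Build an srm-control call that adds a topic to the allow list."""
    return ["srm-control", "topics",
            "--source", source, "--target", target, "--add", topic]


# One command per replication: srm1->srm-target and srm2->srm-target.
commands = [allow_topic_command(src, "srm-target", "test")
            for src in ("srm1", "srm2")]

for cmd in commands:
    print(shlex.join(cmd))
```

Because the allow list is maintained per replication, the topic has to be added once for each source, including the one where it does not exist yet.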

Insights with SMM

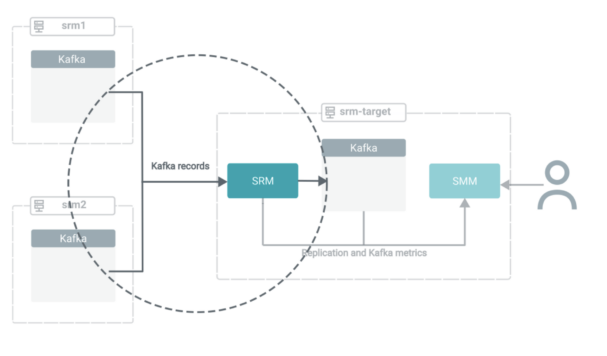

Now that replication is activated, the deployment is in the following state:

In the next few steps, we will shift the focus to SMM to demonstrate how you can leverage its UI to gain insights into what is actually happening in your target cluster.

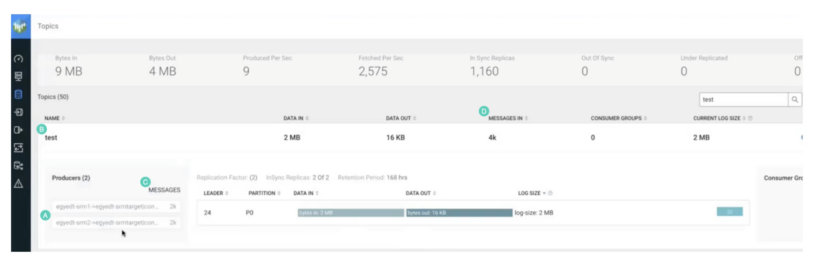

Notice the following:

- The name of the replication is included in the name of the producer that created the topic. The -> notation means replication. Therefore, the topic was created with replication.

- The topic name is the same as on the source cluster. Therefore, it was replicated with prefixless replication. It does not have the source cluster alias as a prefix.

- The producer wrote 2,000 records. This is the same number of records that you produced in the source topic with kafka-producer-perf-test.

- “MESSAGES IN” shows 2,000 records. Again, this is the same amount that was originally produced.
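The naming difference noted in the list above can be illustrated with a short Python sketch. It assumes the topic is named `test` and that prefixed replication joins the source alias and the topic name with a dot; the `replicated_topic_name` function is a hypothetical helper, not part of SRM.

```python
def replicated_topic_name(source_alias, topic, prefixless):
    """Return the name the replicated topic gets on the target cluster."""
    if prefixless:
        return topic                  # identity policy: the name is unchanged
    return f"{source_alias}.{topic}"  # prefixed naming: alias + "." + topic


print(replicated_topic_name("srm1", "test", prefixless=True))   # test
print(replicated_topic_name("srm1", "test", prefixless=False))  # srm1.test
```

With prefixless naming, topics from different sources that share a name map to the same target topic, which is what makes aggregation possible.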

On to aggregation

After successfully replicating data in a prefixless fashion, it is time to move forward and aggregate the data from the other source cluster. First you need to set up the test topic in the second source cluster (srm2), as it does not exist yet. This topic must have the exact same name and configurations as the one on the first source cluster (srm1).

To do this, you need to run kafka-producer-perf-test again, but this time on a host of the srm2 cluster. Additionally, for bootstrap you need to specify srm2 hosts.

Notice how only the bootstraps are different from the first command. This is crucial: the topics on the two clusters must be identical in name and configuration. Otherwise, the topic on the target cluster will constantly switch between two configuration states. Additionally, if the names do not match, aggregation will not happen.

After the producer is done with creating the topic and producing the 2000 records, the topic is immediately replicated. This is because we preactivated replication of the test topic in a previous step. Additionally, the topic records are automatically aggregated into the test topic on srm-target.

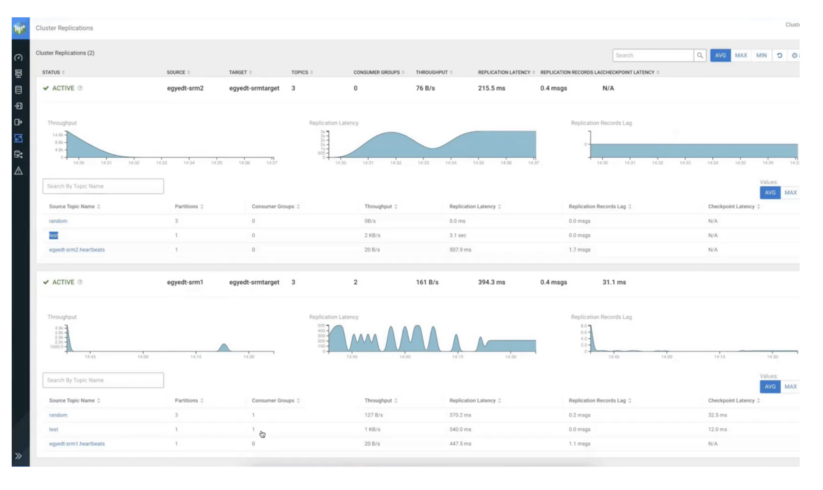

You can verify that aggregation has happened by having a look at the topic in the SMM UI.

The following indicates that aggregation has happened:

- There are now two producers instead of one. Both contain the name of the replication. Therefore, the topic is getting records from two replication sources.

- The topic name is still the same. Therefore, prefixless replication is still working.

- Both producers wrote 2,000 records each.

- “MESSAGES IN” shows 4,000 records.
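The arithmetic behind these numbers can be checked with a tiny in-memory model. This Python sketch is a simplification and not how SRM works internally: clusters are plain dictionaries, the allow list is shared across sources, and the `aggregate` function and the topic name `test` are assumptions made for illustration.

```python
from collections import defaultdict


def aggregate(source_topics, allow_list):
    """Copy allowed topics from every source into one target, keeping names."""
    target = defaultdict(list)
    for alias, topics in source_topics.items():
        for name, records in topics.items():
            if name in allow_list:
                # Prefixless replication: same topic name on the target.
                target[name].extend(records)
    return dict(target)


sources = {
    "srm1": {"test": [f"srm1-{i}" for i in range(2000)]},
    "srm2": {"test": [f"srm2-{i}" for i in range(2000)]},
}

target = aggregate(sources, allow_list={"test"})
print(len(target["test"]))  # 4000
```

If the topic on srm2 had a different name, the model would produce two separate target topics with 2,000 records each, which mirrors the point that aggregation only happens when the names match.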

Summary

In this blog post we looked at how you can use SRM’s prefixless replication feature to aggregate Kafka topics from multiple clusters into a single target cluster.

Although aggregation was in focus, note that prefixless replication can be used for non-aggregation type replication scenarios as well. For example, it is the perfect tool to migrate that old Kafka deployment running on CDH, HDP, or HDF to CDP.

If you want to learn more about SRM and Kafka in CDP Private Cloud Base, hop over to Cloudera’s doc portal and see Streams Messaging Concepts, Streams Messaging How Tos, and/or the Streams Messaging Migration Guide.

To get hands on with SRM, download Cloudera Stream Processing Community edition here.

Interested in joining Cloudera?

At Cloudera, we are working on fine-tuning big data related software bundles (based on Apache open-source projects) to provide our customers a seamless experience while they are running their analytics or machine learning projects on petabyte-scale datasets. Check our website for a test drive!

If you are interested in big data, would like to know more about Cloudera, or are just open to a discussion with techies, visit our fancy Budapest office at our upcoming meetups.

Or, just visit our careers page, and become a Clouderan!